10 AI Bias Mitigation Strategies to Implement Today


Artificial intelligence (AI) models now guide many decisions that affect daily life, including sensitive decisions in hiring, lending, pricing, and eligibility. As the adoption of AI and large language models (LLMs) expands, concerns about how these systems are trained and validated have grown. When input data lacks balance or context, algorithms can produce outcomes that disadvantage some groups or misrepresent actual conditions.

Unfortunately, AI bias is a pervasive issue. A groundbreaking study found that up to 38.6% of ‘facts’ generated by AI models were, in some way, biased. That’s why AI bias mitigation strategies are critical. They provide a structured approach to detecting and reducing bias during model development. Since bias can appear at any point in the AI lifecycle, mitigation must be continuous and supported by clear checkpoints. Improving visibility into data collection and model behavior helps teams detect drift and maintain model accuracy, which limits bias.

Effective AI bias mitigation starts with dependable and current data. Verified and regularly updated information allows teams to identify model drift early so they can retrain models before their fairness or reliability declines. This approach also limits compliance exposure as governance standards evolve. Let’s look at ten AI bias mitigation strategies that help teams design systems built on fairness and accountability.

What is AI bias, and what are mitigation strategies?

AI bias refers to the systematic unfairness that occurs when models produce results influenced by skewed data, design choices, or social and contextual assumptions. It often begins in the data used to train a model, but it can also result from how problems are framed or how algorithms weigh specific inputs.

Bias in AI is related to the concept of fairness, which refers to whether a model produces outcomes that treat comparable individuals or groups equally in similar situations. When a model produces different outcomes for groups that should receive equivalent decisions, the results are considered unfair, which indicates that bias is present.
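Demographic parity is one common way to make the group-level version of this definition measurable. It compares the share of positive decisions each group receives. The sketch below is a minimal, dependency-free illustration that uses hypothetical hiring-screen outputs.

Demographic parity is only one of several fairness definitions. Others compare error rates instead, and the right choice depends on the use case. Fairlearn ships a metric named `demographic_parity_difference` if you prefer a maintained implementation.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive (1) predictions within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Largest gap in selection rate between any two groups (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical hiring-screen outputs: 1 = advance to interview, 0 = reject
preds = [1, 1, 0, 1, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(selection_rates(preds, groups))                # {'A': 0.75, 'B': 0.25}
print(demographic_parity_difference(preds, groups))  # 0.5
```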

Here’s what AI bias looks like in the wild:

- A hiring model trained primarily on past employee data may perpetuate historical imbalances in gender or education, leading to unfair outcomes for certain groups.

- A retail pricing model built on digital shelf analytics may produce higher prices for specific communities if location data is treated as a primary input, because location can act as a proxy for demographic characteristics.

In both cases, bias reduces fairness, can undermine accuracy for specific groups, and increases regulatory exposure under frameworks such as the EU AI Act and GDPR.

Because bias can enter a system at many points, eliminating it is rarely possible. Data and analytics teams address this challenge through AI bias mitigation strategies, which are methods that detect and reduce bias while improving consistency, transparency, and fairness in model performance.

10 AI Bias Mitigation Strategies by Category

These ten strategies, organized across four categories, introduce checkpoints throughout the AI lifecycle to identify biases and measure results against defined fairness metrics. Applied with discipline, they turn responsible AI into a measurable process that supports compliance and operational reliability.

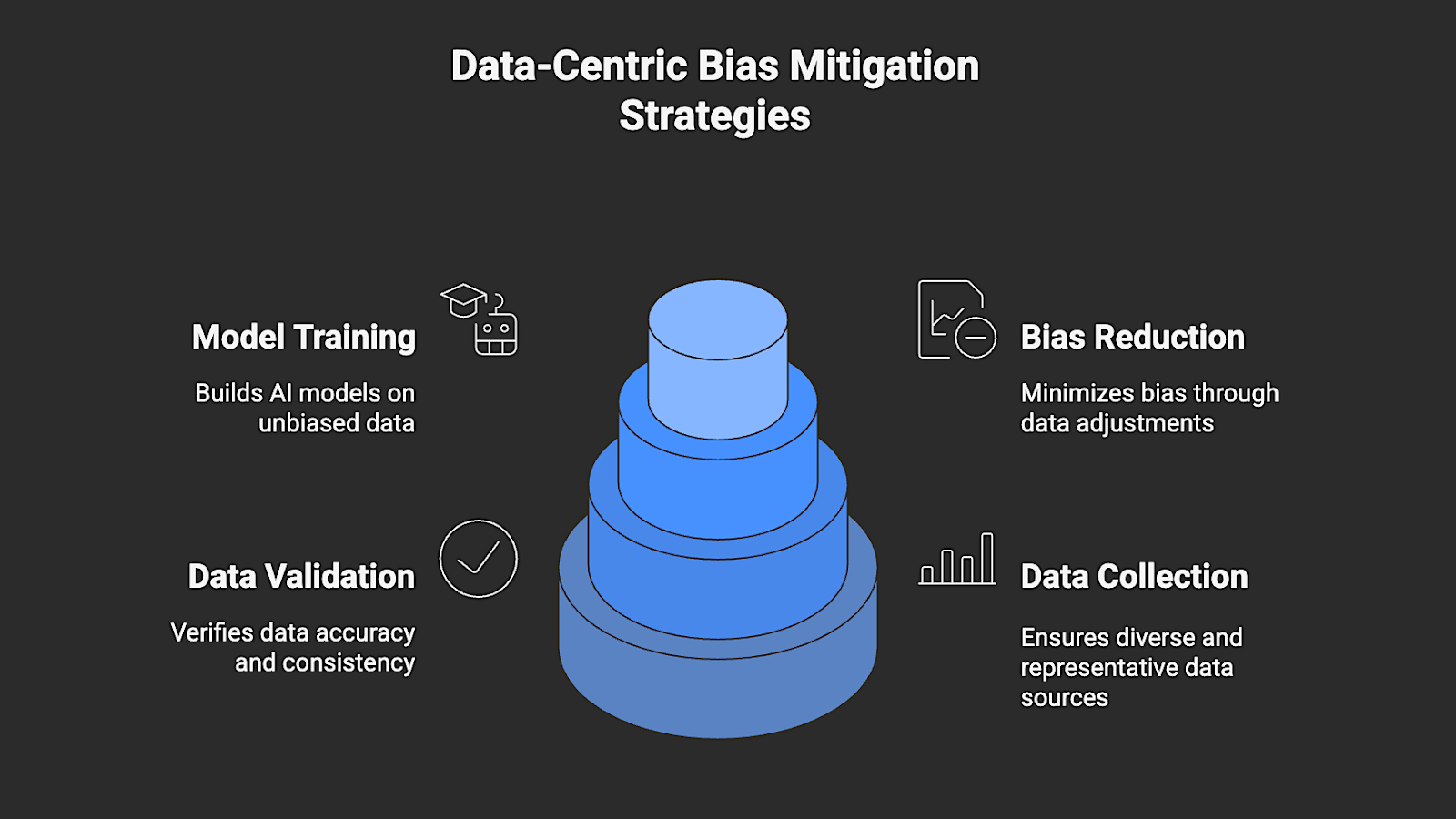

Data-Centric Strategies

Data-centric AI bias mitigation strategies focus on the source of model behavior: the data pipeline. They improve how information is collected and validated so that it better represents the environments where AI systems operate. Strengthening data quality at this stage reduces the likelihood of bias later in training and allows every subsequent mitigation step to build on a consistent, reliable foundation.

1. Collect Diverse and Representative Data

AI models perform best when they’re trained on data that mirrors the full range of real-world conditions. Expanding AI datasets to include different regions, demographics, and contexts reduces two key model risks:

- Learning patterns that reflect only the most common groups.

- Producing biased outcomes.

Over time, though, even well-balanced datasets may lose relevance as markets shift and user behavior changes. Addressing this issue requires continuous data collection that reflects new patterns and preserves diversity.
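A simple representation check compares each group's share of the dataset with a reference distribution for the population the model will serve. In this sketch, the reference shares, the `region` field, and the 5-percentage-point tolerance are all hypothetical placeholders. In practice, they would come from market, census, or customer data.

```python
from collections import Counter


def representation_gaps(records, key, reference_shares):
    """Dataset share minus reference share for each group (negative = underrepresented)."""
    counts = Counter(record[key] for record in records)
    total = len(records)
    return {group: counts.get(group, 0) / total - share
            for group, share in reference_shares.items()}


def underrepresented_groups(records, key, reference_shares, tolerance=0.05):
    """Groups whose dataset share falls more than `tolerance` below the reference."""
    gaps = representation_gaps(records, key, reference_shares)
    return sorted(group for group, gap in gaps.items() if gap < -tolerance)


# Hypothetical dataset and reference shares
records = [{"region": "NA"}] * 75 + [{"region": "EU"}] * 20 + [{"region": "APAC"}] * 5
reference = {"NA": 0.40, "EU": 0.30, "APAC": 0.30}
print(underrepresented_groups(records, "region", reference))  # ['APAC', 'EU']
```

Rerunning a check like this each time new data arrives is one way to notice when continuous collection starts drifting away from the population the model serves.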

Nimble’s Web Search Agents support this process by gathering global, real-time public data, which keeps datasets current and representative of real-world conditions.

2. Apply Rigorous Data Preprocessing and Bias Detection

Large datasets that contain imbalances or labeling errors can distort what a model learns. Rigorous preprocessing applies statistical diagnostics before training. These diagnostics help teams surface issues that would otherwise skew results, such as uneven data distributions across groups or inconsistent labels.

Cleaning and rebalancing at this stage prevents those distortions from influencing predictions later on. The validation and cleaning layers within Nimble’s data processing workflow provide validated, compliant, structured datasets that support bias checks.
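The sketch below illustrates the kind of statistical diagnostics described above. For each group, it reports how many rows exist, how often labels are missing, and the rate of positive labels. Large differences between groups do not prove bias on their own, but they flag where to inspect the labeling process before training. The field names and the binary 0/1 label convention are assumptions.

```python
from collections import defaultdict


def label_diagnostics(rows, group_key, label_key):
    """Per-group row count, missing-label rate, and positive-label rate.

    Labels are assumed to be 1 (positive), 0 (negative), or None/absent (missing).
    """
    stats = defaultdict(lambda: {"n": 0, "missing": 0, "positive": 0})
    for row in rows:
        s = stats[row[group_key]]
        s["n"] += 1
        label = row.get(label_key)
        if label is None:
            s["missing"] += 1
        elif label == 1:
            s["positive"] += 1

    report = {}
    for group, s in stats.items():
        labeled = s["n"] - s["missing"]
        report[group] = {
            "n": s["n"],
            "missing_rate": s["missing"] / s["n"],
            # Positive rate is computed over labeled rows only
            "positive_rate": s["positive"] / labeled if labeled else None,
        }
    return report


rows = [
    {"group": "A", "label": 1}, {"group": "A", "label": 0},
    {"group": "A", "label": 1}, {"group": "A", "label": 1},
    {"group": "B", "label": 0}, {"group": "B", "label": None},
    {"group": "B", "label": 0}, {"group": "B", "label": 1},
]
for group, group_stats in label_diagnostics(rows, "group", "label").items():
    print(group, group_stats)
```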

3. Use Data Augmentation (including Synthetic or Privacy-Preserving Methods)

In many fields where AI is applied, some data categories or user groups are often underrepresented. Controlled augmentation helps correct that imbalance by generating additional, statistically plausible examples.
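Random oversampling is the simplest form of rebalancing. It duplicates randomly chosen rows from smaller groups until group sizes match. It is shown here as a minimal sketch, assuming rows are dicts that carry a group field.

Duplication adds no new information and can encourage overfitting. Synthetic approaches such as SMOTE (available in the imbalanced-learn library) instead generate new, interpolated examples.

```python
import random
from collections import Counter, defaultdict


def oversample_to_balance(rows, group_key, seed=0):
    """Random oversampling: copy randomly chosen rows from smaller groups
    until every group matches the size of the largest group."""
    rng = random.Random(seed)
    by_group = defaultdict(list)
    for row in rows:
        by_group[row[group_key]].append(row)

    target = max(len(members) for members in by_group.values())
    balanced = []
    for members in by_group.values():
        balanced.extend(members)
        # Sample with replacement; copy dicts so duplicates are independent objects
        extra = rng.choices(members, k=target - len(members))
        balanced.extend(dict(row) for row in extra)
    return balanced


rows = [{"group": "A", "x": i} for i in range(6)] + [{"group": "B", "x": i} for i in range(2)]
balanced = oversample_to_balance(rows, "group")
print(dict(Counter(row["group"] for row in balanced)))  # {'A': 6, 'B': 6}
```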

Synthetic data and simulation techniques expand dataset representation when new data collection is limited. Two common privacy-preserving approaches help protect sensitive information during this process:

- Federated learning trains a model across multiple data sources without moving the underlying data to a central location, reducing exposure of sensitive records.

- Differential privacy adds controlled noise to data or model outputs. This prevents individual records from being identified, even when aggregated information is shared.
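The Laplace mechanism makes the differential privacy idea concrete. Applied to a count query, it adds noise with scale sensitivity / epsilon to the true answer. Smaller epsilon values therefore mean stronger privacy and noisier results. This minimal sketch uses only the standard library and is meant for illustration; production systems should rely on an audited differential privacy library.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws with mean `scale`."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(true_count, epsilon, rng, sensitivity=1.0):
    """Laplace mechanism for a counting query.

    Adding or removing one record changes a count by at most 1, so the
    sensitivity is 1 and the noise scale is sensitivity / epsilon.
    """
    return true_count + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random(7)
for eps in (1.0, 0.1):
    print(eps, round(private_count(120, eps, rng), 1))
```

Because the noise scale does not depend on group size, counts for small groups are affected proportionally more. This is worth checking when fairness metrics are computed on privatized data.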

Together, these techniques let teams expand and rebalance training data without compromising privacy or compliance, giving models a more balanced and relevant dataset to learn from.

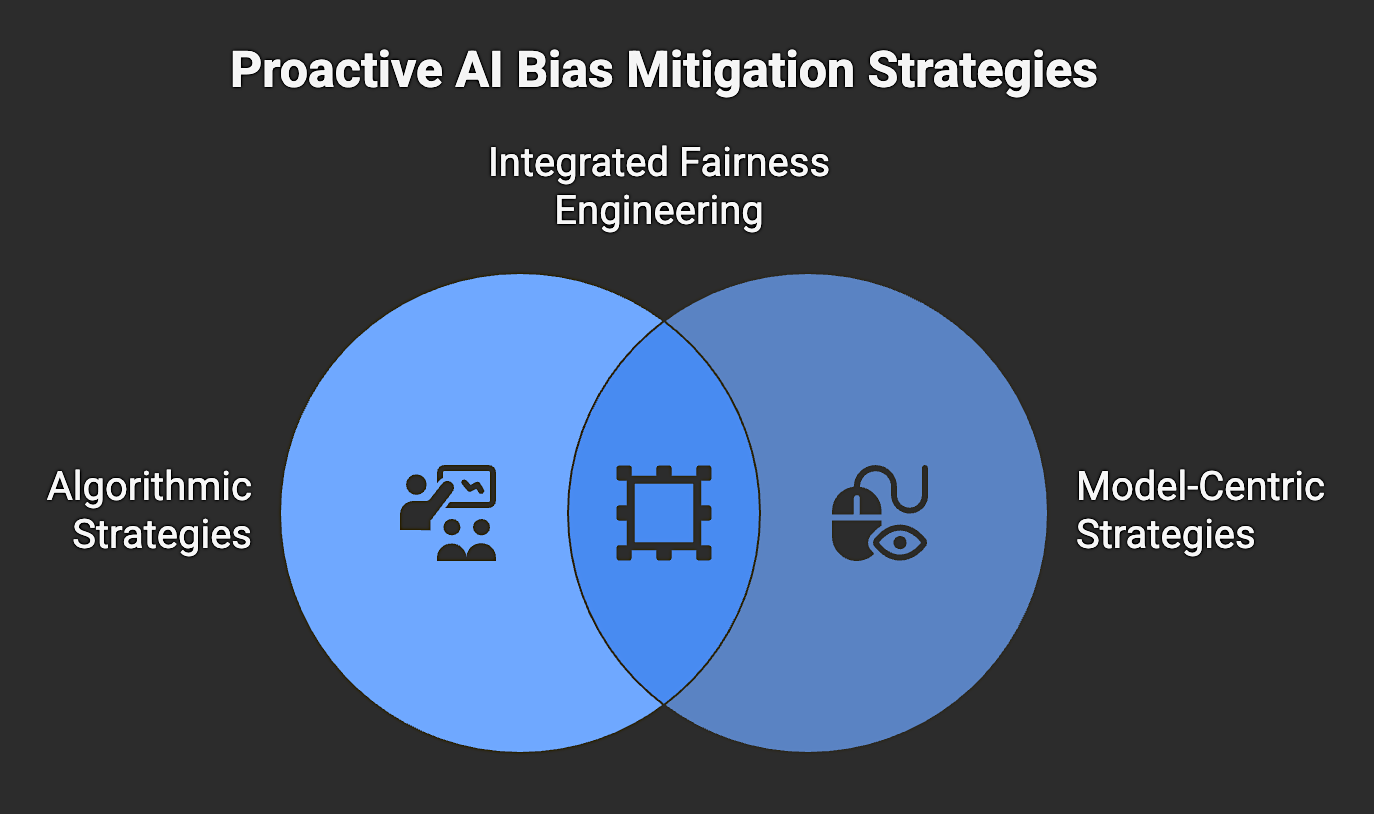

Algorithmic and Model-Centric Strategies

Algorithmic and model-centric AI bias mitigation strategies address bias within the design and training of AI systems. They modify how models learn and how their performance is evaluated. Building fairness objectives directly into modeling workflows allows your teams to detect and correct unequal outcomes before they reach production.

4. Embed Fairness-by-Design from Development to Deployment

Fairness needs to be defined and measured throughout the model development process. A practical way to accomplish this is to set clear fairness objectives and constraints during labeling, training, and validation, which also makes it possible to track how fairness changes across model versions.

To ensure that your models meet defined standards before they are released, the best practice is to require fairness scoring as a condition for deployment. This step builds accountability into your entire workflow and makes fairness a measurable part of model quality.
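A fairness gate can be as simple as a check that runs in the release pipeline and blocks deployment when group-level metrics fall outside agreed limits. The sketch below assumes per-group selection rates have already been computed on a validation set.

The `FairnessGate` class and both thresholds are hypothetical policy choices. The 0.8 ratio is borrowed from the four-fifths rule of thumb used in US employment-selection guidance.

```python
from dataclasses import dataclass


@dataclass
class FairnessGate:
    """Hypothetical release gate on per-group selection rates."""
    max_difference: float = 0.10  # assumed policy limit on the rate gap
    min_ratio: float = 0.80       # assumed; mirrors the four-fifths rule of thumb

    def evaluate(self, rates):
        """Return (passed, reasons) for a dict of group -> selection rate."""
        lowest, highest = min(rates.values()), max(rates.values())
        difference = highest - lowest
        ratio = lowest / highest if highest else 1.0
        reasons = []
        if difference > self.max_difference:
            reasons.append(f"selection-rate difference {difference:.2f} exceeds {self.max_difference:.2f}")
        if ratio < self.min_ratio:
            reasons.append(f"selection-rate ratio {ratio:.2f} is below {self.min_ratio:.2f}")
        return not reasons, reasons


gate = FairnessGate()
passed, reasons = gate.evaluate({"group_a": 0.50, "group_b": 0.30})
print(passed)  # False
for reason in reasons:
    print(reason)
```

In a CI job, a failed evaluation would typically print its reasons and exit with a nonzero status so the release step does not run.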

5. Implement Algorithmic Fairness Techniques

Algorithmic fairness techniques modify how models learn in order to reduce bias in predictions. The following methods, applied during preprocessing, training, or evaluation, help correct disparities between groups by reshaping how your AI model prioritizes features or outcomes:

- Adversarial debiasing – An ML technique that trains the main model alongside a second neural network (the adversary). The adversary tries to predict the sensitive attribute from the main model’s outputs, and the main model is penalized when it succeeds. This pushes the main model toward predictions that carry less information about group membership.

- Counterfactual fairness – Assesses whether a model's prediction for an individual would stay the same if their sensitive attributes (like race, gender, or age) were different, while all other relevant factors stayed the same.

- Reweighing – A preprocessing technique used to mitigate bias in a dataset by assigning different weights to training samples.

- Open-source libraries – Monitor and quantify fairness across demographic subgroups over time. Examples include Fairlearn and AIF360.
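Reweighing is straightforward to sketch. Following the formulation by Kamiran and Calders, each training example gets the weight P(A=a) * P(Y=y) / P(A=a, Y=y), where A is the sensitive attribute and Y is the label. After weighting, the label is statistically independent of group membership. The data below is hypothetical.

AIF360 provides this technique as its `Reweighing` preprocessor. Many scikit-learn estimators accept the resulting values through the `sample_weight` argument of `fit`.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight w(a, y) = P(A=a) * P(Y=y) / P(A=a, Y=y) for every example."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[a] / n) * (label_counts[y] / n) / (joint_counts[(a, y)] / n)
        for a, y in zip(groups, labels)
    ]


def weighted_positive_rate(weights, groups, labels, group):
    """Weighted share of positive labels within one group."""
    total = sum(w for w, g in zip(weights, groups) if g == group)
    positive = sum(w for w, g, y in zip(weights, groups, labels) if g == group and y == 1)
    return positive / total


# Hypothetical data: group A has a 75% positive rate, group B has 25%
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)
print([round(w, 3) for w in weights])
print(weighted_positive_rate(weights, groups, labels, "A"),
      weighted_positive_rate(weights, groups, labels, "B"))
```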

6. Adopt Fairness-Aware Optimization and Evaluation

AI optimization processes typically target accuracy alone. A side effect is that a model can perform well on average while performing unevenly across groups. Fairness-aware optimization modifies loss functions or adds fairness constraints to narrow those performance gaps across groups defined by protected features.
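The sketch below shows one way to modify a loss function. It trains logistic regression with gradient descent on the usual log-loss plus a penalty, `lam * gap**2`. Here `gap` is the difference in average predicted score between two groups, a demographic-parity-style constraint.

The sketch assumes NumPy, a binary group indicator, and synthetic data in which one feature acts as a proxy for group membership. `lam` controls the trade-off between fit and parity. It illustrates the technique rather than a tuned training setup. Fairlearn's reductions module (for example, `ExponentiatedGradient` with a `DemographicParity` constraint) offers a maintained alternative.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_fair_logreg(X, y, group, lam=1.0, lr=0.1, epochs=2000):
    """Minimize mean log-loss + lam * (mean score in group 1 - mean score in group 0) ** 2."""
    n, d = X.shape
    w, b = np.zeros(d), 0.0
    g1, g0 = group == 1, group == 0
    for _ in range(epochs):
        p = sigmoid(X @ w + b)
        # Gradient of the mean log-loss
        grad_w = X.T @ (p - y) / n
        grad_b = np.mean(p - y)
        # Gradient of the parity penalty; s is the sigmoid's derivative w.r.t. the logit
        gap = p[g1].mean() - p[g0].mean()
        s = p * (1 - p)
        dgap_w = (X[g1] * s[g1][:, None]).mean(axis=0) - (X[g0] * s[g0][:, None]).mean(axis=0)
        dgap_b = s[g1].mean() - s[g0].mean()
        grad_w += lam * 2 * gap * dgap_w
        grad_b += lam * 2 * gap * dgap_b
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def score_gap(X, w, b, group):
    p = sigmoid(X @ w + b)
    return abs(p[group == 1].mean() - p[group == 0].mean())


# Synthetic data: `proxy` is correlated with group membership, `skill` is not
rng = np.random.default_rng(0)
n = 2000
group = rng.integers(0, 2, size=n)
proxy = group + rng.normal(0, 0.5, size=n)
skill = rng.normal(0, 1, size=n)
X = np.column_stack([proxy, skill])
y = ((0.8 * proxy + skill + rng.normal(0, 0.5, size=n)) > 0.5).astype(float)

w_base, b_base = train_fair_logreg(X, y, group, lam=0.0)
w_fair, b_fair = train_fair_logreg(X, y, group, lam=5.0)
print("gap without penalty:", round(score_gap(X, w_base, b_base, group), 3))
print("gap with penalty:   ", round(score_gap(X, w_fair, b_fair, group), 3))
```

Raising `lam` typically narrows the gap at some cost in accuracy. The chosen value is therefore itself a policy decision worth documenting.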

Continuous retraining and evaluation are needed to sustain these improvements as new data enters the system. Using real-time, high-fidelity data enables this process by providing up-to-date inputs that support continuous re-optimization and monitoring of fairness over time, especially in large-scale AI and LLM training environments.

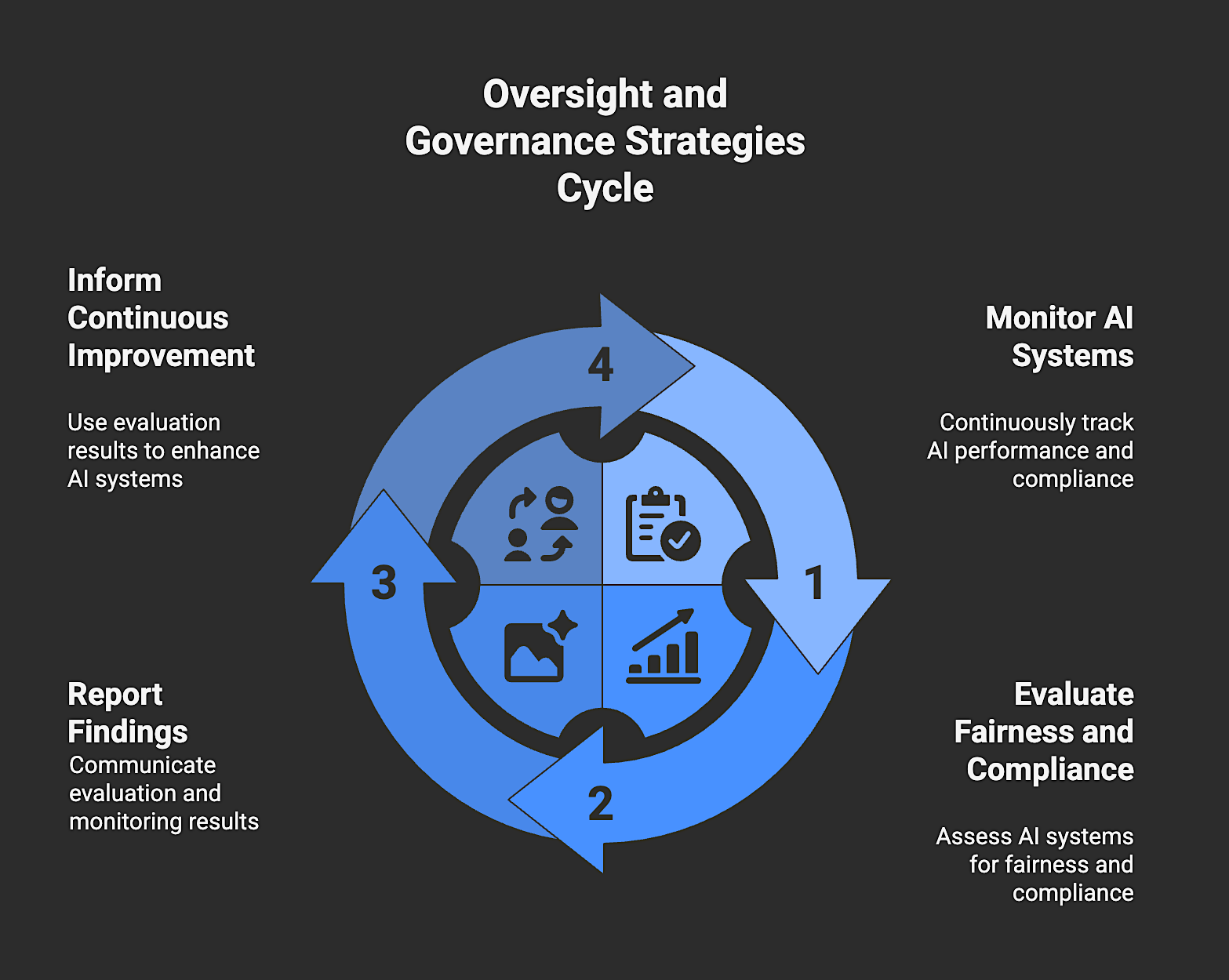

Oversight and Governance Strategies

Oversight and governance strategies maintain accountability within AI companies through structured monitoring and control. They define how fairness and compliance are evaluated and how those results inform continuous improvement. This approach keeps fairness oversight active throughout the model’s lifecycle rather than treating it as a one-time requirement before deployment.

7. Conduct Regular Bias Impact Assessments and Model Audits

Bias impact assessments measure how model decisions affect different groups under real operating conditions. They are used alongside regular model audits to identify whether fairness declines as data or user behavior changes. Teams perform bias scoring before and after deployment, review those results independently, and compare them against established benchmarks.

Feedback loops then connect findings back to development, prompting retraining or recalibration when discrepancies emerge. These practices create a consistent record of fairness results that support accountability and compliance. Alongside fairness and bias evaluations, teams should run dedicated AI security testing for LLMs and agents to catch issues like prompt injection, model exfiltration, and insecure inference APIs.
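Bias scoring before and after deployment can reuse the same metric on two datasets. This sketch uses the equal-opportunity gap, which is the difference in true positive rates between groups. It measures the gap on validation data and on labeled production data, then reports a finding when the post-deployment gap exceeds a benchmark or widens noticeably.

The benchmark and tolerance values are hypothetical. Post-deployment scoring also assumes that ground-truth outcomes eventually become available.

```python
def true_positive_rates(y_true, y_pred, groups):
    """Per group, the share of actual positives that the model predicted as positive."""
    hits, positives = {}, {}
    for t, p, g in zip(y_true, y_pred, groups):
        if t == 1:
            positives[g] = positives.get(g, 0) + 1
            hits[g] = hits.get(g, 0) + (p == 1)
    return {g: hits[g] / positives[g] for g in positives}


def equal_opportunity_gap(y_true, y_pred, groups):
    rates = true_positive_rates(y_true, y_pred, groups)
    return max(rates.values()) - min(rates.values())


def audit(pre, post, benchmark_gap=0.05, max_increase=0.02):
    """Compare the gap at validation time (pre) with the gap in production (post).

    `pre` and `post` are (y_true, y_pred, groups) tuples; thresholds are assumptions.
    """
    gap_pre = equal_opportunity_gap(*pre)
    gap_post = equal_opportunity_gap(*post)
    findings = []
    if gap_post > benchmark_gap:
        findings.append("post-deployment gap exceeds benchmark")
    if gap_post - gap_pre > max_increase:
        findings.append("gap widened since validation")
    return gap_pre, gap_post, findings


groups = ["A"] * 4 + ["B"] * 4
y_true = [1] * 8
pred_validation = [1, 1, 1, 0, 1, 1, 1, 0]
pred_production = [1, 1, 1, 1, 1, 0, 0, 1]
gap_pre, gap_post, findings = audit((y_true, pred_validation, groups),
                                    (y_true, pred_production, groups))
print(gap_pre, gap_post, findings)
```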

8. Develop Strong AI Governance and Ethical Frameworks

Governance defines how an organization manages fairness, accountability, and access to sensitive data across its AI systems. Leadership and compliance teams must create formal governance policies that specify:

- Who owns model oversight

- How fairness issues are reported

- What procedures guide their resolution

These policies provide decision-making structure and transparency throughout a model’s development and deployment.

An ethical framework complements governance by outlining the organization’s guiding principles for responsible AI, including transparency, proportionality, and human oversight. Established frameworks, such as the NIST AI Risk Management Framework and ISO/IEC 42001, provide organizations with clear standards for implementing these principles in practice.

Together, governance and ethics align AI programs with regulatory requirements such as GDPR and CCPA. Nimble’s compliance-first data collection approach supports this alignment by maintaining traceable and auditable sourcing methods across all data pipelines.

9. Prioritize Transparency, Documentation, and Explainability

Transparency depends on visibility into how data and models influence decisions. To help your teams trace outputs back to their origins (and understand how performance changes over time), maintain detailed records of:

- Model configurations

- Data sources

- Preparation steps

Explainability builds on that foundation by revealing which inputs drive model predictions and how decision logic operates. These insights help your technical and compliance teams identify potential bias and validate that model behavior aligns with organizational standards.
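Permutation importance is one model-agnostic way to see which inputs drive predictions. It shuffles one feature column at a time and measures how much accuracy drops. A high score on a feature that can act as a proxy for a protected attribute (location is a common example) is a signal to investigate.

The sketch assumes a model exposed as a plain Python function over list rows. scikit-learn provides a maintained version as `sklearn.inspection.permutation_importance`.

```python
import random


def accuracy(model, X, y):
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Average drop in accuracy when each feature column is shuffled independently."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild rows with only column j permuted
            X_perm = [row[:j] + [value] + row[j + 1:] for row, value in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


def model(row):
    """Toy model that only looks at feature 0."""
    return int(row[0] > 0.5)


data_rng = random.Random(1)
X = [[data_rng.random(), data_rng.random()] for _ in range(200)]
y = [model(row) for row in X]
print(permutation_importance(model, X, y))
```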

To simplify this process, use a data provider that offers structured outputs, including metadata that links the data pipeline to specific model outcomes. This setup makes it easier to document lineage and explain results during audits or internal reviews.
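A structured lineage entry stored with each model version is one lightweight way to capture the model configurations, data sources, and preparation steps listed earlier. The `LineageRecord` class, its fields, and the example values are hypothetical. The SHA-256 fingerprint over the serialized record makes it easy to confirm during an audit that documentation has not changed since it was filed.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass
class LineageRecord:
    """Hypothetical documentation record tying a model version to its data."""
    model_name: str
    model_version: str
    config: dict
    data_sources: list
    preparation_steps: list
    fairness_metrics: dict = field(default_factory=dict)

    def fingerprint(self):
        """SHA-256 hash of the record's canonical JSON form."""
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def to_json(self):
        record = asdict(self)
        record["fingerprint"] = self.fingerprint()
        return json.dumps(record, indent=2, sort_keys=True)


record = LineageRecord(
    model_name="pricing-model",                     # hypothetical
    model_version="1.4.0",
    config={"algorithm": "gradient_boosting", "max_depth": 6},
    data_sources=["web_pricing_feed_2024_06"],      # hypothetical source identifier
    preparation_steps=["deduplicate", "drop_rows_missing_price", "reweigh_by_region"],
    fairness_metrics={"selection_rate_gap": 0.03},
)
print(record.to_json())
```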

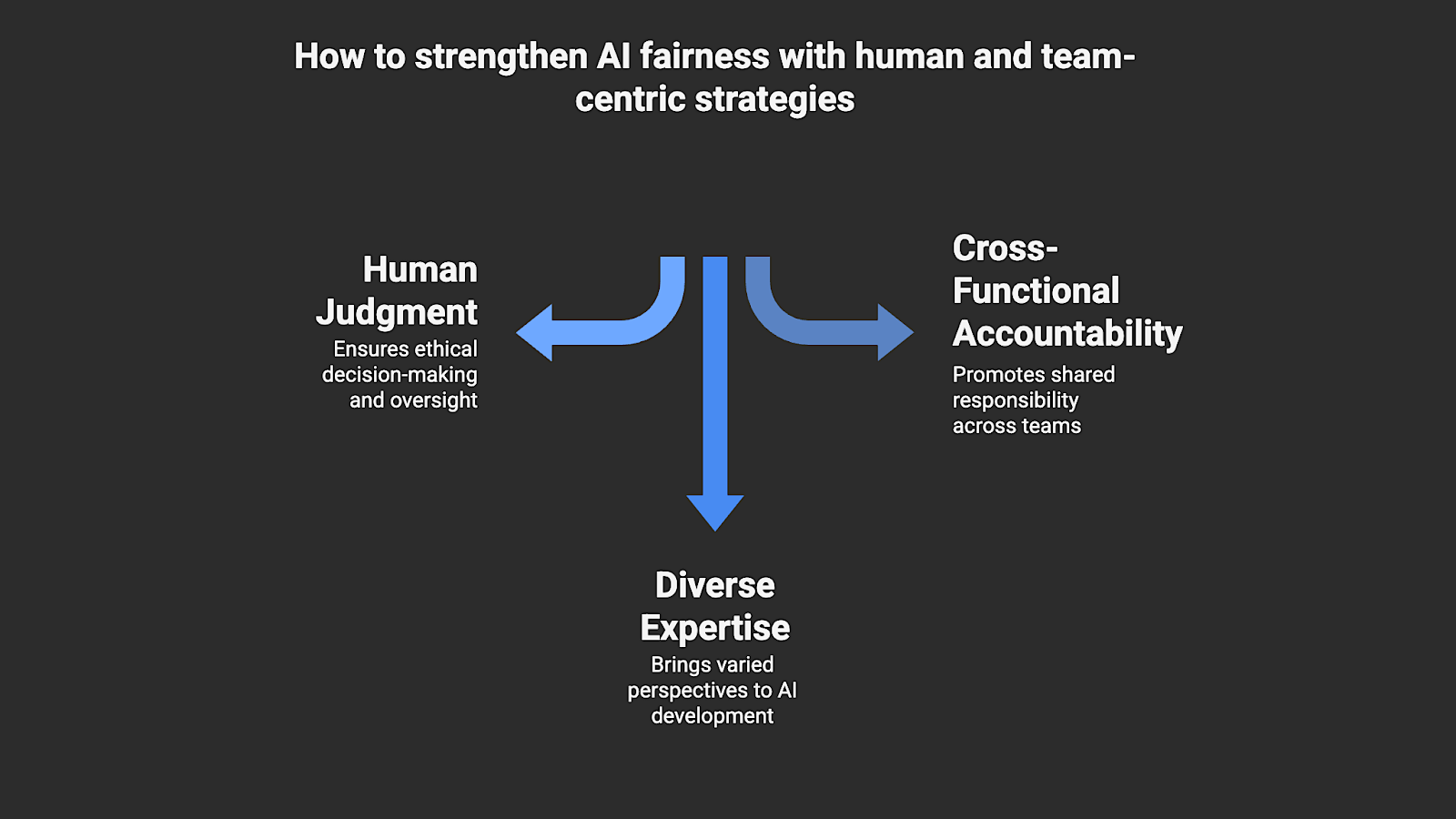

Human and Team-Centric Strategies

Human and team-centric strategies strengthen AI fairness through human judgment, diverse expertise, and cross-functional accountability. They require ongoing human involvement so that oversight and responsibility are shared across teams and technical controls are backed by informed judgment and ethical decision-making.

10. Integrate Human-in-the-Loop Oversight and Build Diverse Teams

This strategy embeds human review directly into AI decision workflows. To do this right, have teams with diverse expertise assess model outputs in critical processes, then validate them against your organization’s established guidelines to confirm that results remain accurate and representative.

Their feedback identifies where models drift from expected behavior and provides input for retraining or adjustment. Employing transparent and traceable data pipelines makes this oversight efficient and scalable by showing reviewers how data is collected, processed, and used throughout model development.
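The routing step can be sketched as a rule that sends uncertain or high-stakes cases to reviewers and auto-applies the rest. Reviewer decisions are logged so that override rates can be compared across groups. The thresholds, the `high_stakes` flag, and the data shapes are assumptions for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Decision:
    case_id: str
    group: str
    score: float       # model's probability for the positive class
    high_stakes: bool  # assumed flag, e.g. set by business rules


def route(decisions, low=0.35, high=0.65):
    """Send uncertain (score within [low, high]) or high-stakes cases to review."""
    auto, review = [], []
    for d in decisions:
        needs_human = d.high_stakes or low <= d.score <= high
        (review if needs_human else auto).append(d)
    return auto, review


def override_rates(reviews):
    """reviews: (group, model_label, human_label) tuples -> share overridden per group."""
    totals, overrides = defaultdict(int), defaultdict(int)
    for group, model_label, human_label in reviews:
        totals[group] += 1
        overrides[group] += model_label != human_label
    return {g: overrides[g] / totals[g] for g in totals}


decisions = [
    Decision("c1", "A", 0.92, False),
    Decision("c2", "B", 0.50, False),
    Decision("c3", "A", 0.10, True),
]
auto, review = route(decisions)
print([d.case_id for d in auto], [d.case_id for d in review])  # ['c1'] ['c2', 'c3']
print(override_rates([("A", 1, 1), ("A", 0, 0), ("B", 1, 0), ("B", 0, 0)]))
```

A group whose predictions reviewers override noticeably more often is a natural candidate for retraining or recalibration.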

Mitigate AI Bias to Build Fairness That Lasts with Nimble

AI bias mitigation is now a requirement for responsible AI. Treating mitigation as a continuous process protects accuracy and trust, but effective mitigation begins with the data that powers every model decision. Fairness and accuracy depend on dependable data and consistent human oversight to keep models aligned with regulatory expectations. Without current, representative, and compliant information, even well-designed systems can drift toward bias over time.

Nimble provides a reliable foundation of high-quality, current, and compliant data that enhances AI bias mitigation efforts. Its Web Search Agents collect and deliver real-time, structured web data that enables organizations to detect and mitigate bias continuously, retrain models responsibly, and meet compliance standards with confidence.

Book a demo of Nimble to explore how high-quality, compliant data supports responsible, bias-aware AI at scale.

