Feeding AI Agents Real-Time Web Data with Nimble MCP

AI agents are an incredible innovation. They harness the intelligence and reasoning capabilities of modern AI models and apply them to planning, automation, reaction, and process management. While agentic workflows have tremendous potential, they often produce outdated or inaccurate results, and it’s rarely because the reasoning model broke.

It’s because the data fueling the model stopped reflecting reality.

Because of their autonomy, Agents need live, trustworthy information in order to work predictably and reliably. That’s what Nimble’s integration with Anthropic’s Model Context Protocol (MCP) provides: a way for Agents to pull real-time structured web data as they need it.

The Data Problem Behind AI Agent Inaccuracy

The volume of data AI needs to work effectively means that inputs are often datasets refreshed at long intervals, cached information, or search engine results that show only fragments of the full picture. These sources go out of date quickly and can miss key data points, which hurts agent accuracy.

The web makes this even harder. Websites are dynamic. They render content on the client side, personalize pages per location, and change layouts often. Traditional scrapers and one-off APIs can’t keep up. They break, or worse, silently miss information.

Without fresh and structured data, agents drift. Reliability depends on keeping three things in sync: freshness, structure, and accuracy. Lose one, and the others follow. What’s needed is a dependable, automated way to stream structured web context into the agent’s decision process.

From Episodic Data Collection to Continuous Intelligence

Most data pipelines are still built for batch collection. They pull snapshots of information at fixed intervals, which works well enough for historical analysis but not for autonomous systems that need to make decisions in real time.

Teams building agents, recommendation systems, or analytics models are now looking for a steady flow of reliable, governed data instead of periodic exports.

Real-time data only matters if it’s trustworthy. A live pipeline must validate and structure information, not just deliver it faster. Without governance, streaming becomes noise or, worse, a compliance nightmare.
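To make "validate, not just deliver" concrete, here is a minimal sketch of a gate that checks a record for required fields, a sane price, and freshness before it reaches an agent. The field names and the 15-minute threshold are illustrative assumptions, not part of any Nimble schema.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical required fields for a pricing record; not a Nimble schema.
REQUIRED_FIELDS = {"product", "price", "currency", "fetched_at"}


def validate_record(record: dict, now: datetime, max_age: timedelta) -> list[str]:
    """Return a list of problems; an empty list means the record passes."""
    problems = []
    missing = REQUIRED_FIELDS - record.keys()
    if missing:
        problems.append(f"missing fields: {sorted(missing)}")
    price = record.get("price")
    if price is not None and (not isinstance(price, (int, float)) or price <= 0):
        problems.append("price must be a positive number")
    fetched_at = record.get("fetched_at")
    if fetched_at is not None:
        # Timestamps are assumed to be ISO 8601 strings with a UTC offset.
        age = now - datetime.fromisoformat(fetched_at)
        if age > max_age:
            problems.append(f"stale by {age - max_age}")
    return problems


now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
fresh = {"product": "laptop", "price": 999.0, "currency": "USD",
         "fetched_at": "2025-01-01T11:55:00+00:00"}
stale = {**fresh, "fetched_at": "2025-01-01T09:00:00+00:00"}
incomplete = {"product": "laptop", "price": -1}

samples = [("fresh", fresh), ("stale", stale), ("incomplete", incomplete)]
results = {name: validate_record(rec, now, timedelta(minutes=15))
           for name, rec in samples}
for name, problems in results.items():
    print(name, "->", problems or "ok")
```

Returning a list of problems rather than a boolean lets the pipeline log why a record was rejected, which is the kind of audit trail governance usually requires.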

The Model Context Protocol (MCP)

The Model Context Protocol, or MCP, is an open protocol introduced by Anthropic that defines how agents connect to external data and tools. Instead of treating every integration as a custom task, MCP provides a standard way to discover and call data sources.

It turns contextual data into something agents can fetch precisely as they need it. With MCP, a model can query a connected service, get a structured response, and use that data to refine its reasoning. The process is transparent, repeatable, and secure.

This protocol is already reshaping how multi-agent systems are built by defining a shared language for tools and data, making it easy for any compliant client to add new capabilities without rebuilding integrations from scratch.
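Under the hood, MCP messages are JSON-RPC 2.0, and tool discovery and invocation use the tools/list and tools/call methods. The sketch below builds the two request envelopes by hand to show their shape; in practice an MCP client library does this for you. The tool name and argument are placeholders.

```python
import json
from itertools import count
from typing import Optional

_ids = count(1)  # JSON-RPC request ids only need to be unique per session


def jsonrpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request envelope, the wire format MCP uses."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


# Discovery: ask the server which tools it exposes.
list_request = jsonrpc_request("tools/list")

# Invocation: call one tool by name with a dict of arguments.
# "example_tool" and its "query" argument are placeholders.
call_request = jsonrpc_request(
    "tools/call",
    {"name": "example_tool", "arguments": {"query": "laptop prices"}},
)

print(json.dumps(list_request))
print(json.dumps(call_request, indent=2))
```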

Extending MCP for Real-Time Web Intelligence with Nimble

The Nimble MCP Server connects MCP-compatible agents to Nimble’s Web Search Agents: specialized, browser-based systems that can navigate, render, and capture real web content at scale. This gives agents a direct path to accurate, structured, real-time data.

Here’s what happens behind the scenes:

- The agent sends a query through MCP, such as “Find current laptop prices across major US retailers.”

- The Nimble MCP Server receives the request and passes it to a Web Search Agent.

- The Web Search Agent loads live sites in a real browser environment, paginates, renders JavaScript, captures network calls, and extracts the relevant data.

- Nimble normalizes the results into a clean schema and returns them as structured JSON.

- The MCP client incorporates that data into the agent’s reasoning process.

This setup turns the open web into a structured data layer that any MCP-compatible environment can use. No brittle scripts, no maintenance overhead, and no data drift.
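The normalization step is what makes results from different sites comparable. As a rough illustration of the idea (not Nimble’s implementation), the sketch below maps two differently shaped raw records onto one shared schema. Every field name here is hypothetical.

```python
import re


def first_present(raw: dict, *keys: str):
    """Return the value of the first key that exists in raw, else None."""
    for key in keys:
        if key in raw:
            return raw[key]
    return None


def parse_price(value) -> float:
    """Turn values such as '$1,599.00' or 1549 into a float."""
    if isinstance(value, (int, float)):
        return float(value)
    return float(re.sub(r"[^\d.]", "", value))


def normalize(retailer: str, raw: dict) -> dict:
    """Map one retailer-specific record onto a shared, hypothetical schema."""
    availability = first_present(raw, "in_stock", "availability")
    return {
        "retailer": retailer,
        "title": first_present(raw, "title", "productName"),
        "price": parse_price(first_present(raw, "price", "salePrice")),
        "in_stock": availability in (True, "InStock"),
    }


# Two made-up raw records with different field names and price formats.
raw_a = {"title": "MacBook Pro 14", "price": "$1,599.00", "in_stock": True}
raw_b = {"productName": "MacBook Pro 14-inch", "salePrice": 1549,
         "availability": "InStock"}

rows = [normalize("Retailer A", raw_a), normalize("Retailer B", raw_b)]
for row in rows:
    print(row)
```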

Connecting Nimble MCP to an Agent Environment

To show what this looks like in practice, let’s connect Claude Desktop to the Nimble MCP Server and run a live query.

Step 1: Configure the Client

Claude Desktop talks to Nimble’s remote MCP server. There is no need to run a local server.

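Edit Claude Desktop’s configuration file, claude_desktop_config.json. On macOS it lives at ~/Library/Application Support/Claude/claude_desktop_config.json; on Windows, at %APPDATA%\Claude\claude_desktop_config.json.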

    {
      "mcpServers": {
        "nimble": {
          "command": "npx",
          "args": [
            "-y", "mcp-remote@latest",
            "https://mcp.nimbleway.com/sse",
            "--header", "Authorization:${NIMBLE_API_KEY}"
          ],
          "env": {
            "NIMBLE_API_KEY": "Bearer YOUR_API_KEY"
          }
        }
      }
    }

Restart Claude Desktop to load the new connection.
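If the config file already lists other servers, editing it by hand risks overwriting them. Here is a minimal sketch of merging the Nimble entry into an existing config instead. It assumes servers live under the top-level mcpServers key that Claude Desktop reads; the add_nimble_server helper and the "filesystem" entry are hypothetical.

```python
import json


def add_nimble_server(config: dict, api_key: str) -> dict:
    """Return a copy of a Claude Desktop config with a 'nimble' entry added."""
    updated = json.loads(json.dumps(config))  # cheap deep copy of JSON data
    servers = updated.setdefault("mcpServers", {})
    servers["nimble"] = {
        "command": "npx",
        "args": [
            "-y", "mcp-remote@latest",
            "https://mcp.nimbleway.com/sse",
            "--header", "Authorization:${NIMBLE_API_KEY}",
        ],
        # The "Bearer " prefix goes in the env value so the header argument
        # itself contains no spaces.
        "env": {"NIMBLE_API_KEY": f"Bearer {api_key}"},
    }
    return updated


# A made-up existing config with one unrelated server already registered.
existing = {"mcpServers": {"filesystem": {"command": "npx",
                                          "args": ["-y", "some-other-server"]}}}
merged = add_nimble_server(existing, "YOUR_API_KEY")
print(json.dumps(merged, indent=2))
```

To apply it to a real file, load the file with json.load, pass the result through the helper, and write it back with json.dump.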

Step 2: Query the Web

Ask Claude:

“Get current pricing and availability for MacBook Pro 14-inch across major US retailers.”

Here’s what happens:

- Claude calls tools/list to see which MCP tools are available.

- It selects nimble_run_search_agent and sends the request with the required arguments.

- The Nimble MCP Server runs the delegated search and returns structured results from the live web.
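The selection step can be sketched as follows. Each entry in a tools/list response carries a name and an inputSchema (a JSON Schema object), so a client can check for missing required arguments before calling. The sample tool list below is illustrative only: the schemas are assumptions based on the argument names used in this article, not the server’s actual definitions.

```python
# Illustrative stand-in for a tools/list result; the real schemas may differ.
SAMPLE_TOOLS = [
    {
        "name": "nimble_find_search_agent",
        "inputSchema": {
            "type": "object",
            "properties": {"domain": {"type": "string"},
                           "entity_type": {"type": "string"}},
            "required": [],
        },
    },
    {
        "name": "nimble_run_search_agent",
        "inputSchema": {
            "type": "object",
            "properties": {"input": {"type": "string"},
                           "template_number": {"type": "integer"}},
            "required": ["input", "template_number"],
        },
    },
]


def missing_arguments(tools: list, tool_name: str, arguments: dict) -> list:
    """Return required argument names that the caller has not supplied."""
    tool = next((t for t in tools if t["name"] == tool_name), None)
    if tool is None:
        raise LookupError(f"server does not expose {tool_name!r}")
    required = tool.get("inputSchema", {}).get("required", [])
    return [name for name in required if name not in arguments]


incomplete = missing_arguments(
    SAMPLE_TOOLS, "nimble_run_search_agent", {"input": "MacBook Pro 14-inch"})
complete = missing_arguments(
    SAMPLE_TOOLS, "nimble_run_search_agent",
    {"input": "MacBook Pro 14-inch", "template_number": 1})
print("missing:", incomplete)
print("missing:", complete)
```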

Step 3: Python Example

Below is a minimal example of calling Nimble MCP from a Python client, written against the official MCP Python SDK (pip install mcp). First, list available templates with nimble_find_search_agent, then execute the chosen template with nimble_run_search_agent. Note the argument names.

    import asyncio
    import json
    import os

    from mcp import ClientSession
    from mcp.client.sse import sse_client

    NIMBLE_MCP_URL = "https://mcp.nimbleway.com/sse"


    async def main():
        api_key = os.getenv("NIMBLE_API_KEY")
        if not api_key:
            raise RuntimeError("Set NIMBLE_API_KEY in your environment")

        headers = {"Authorization": f"Bearer {api_key}"}

        # Open the SSE transport, then run an MCP session over it
        async with sse_client(NIMBLE_MCP_URL, headers=headers) as (read, write):
            async with ClientSession(read, write) as session:
                await session.initialize()

                # 1) List tools and confirm availability
                tools = await session.list_tools()
                print("Available tools:", [t.name for t in tools.tools])

                # 2) Discover relevant templates for retail product pricing
                find_resp = await session.call_tool(
                    "nimble_find_search_agent",
                    {"domain": "ecommerce", "entity_type": "product_pricing"},
                )

                # Tool results arrive as content items. This assumes the first
                # item is JSON text with a "templates" list; adjust it to the
                # actual response. For demonstration, pick the first template.
                templates = json.loads(find_resp.content[0].text)["templates"]
                template_number = templates[0]["template_number"]
                print("Selected template_number:", template_number)

                # 3) Execute the search agent with required args
                run_resp = await session.call_tool(
                    "nimble_run_search_agent",
                    {
                        "input": "MacBook Pro 14-inch",
                        "template_number": template_number,
                    },
                )
                print("Run response:", run_resp)


    if __name__ == "__main__":
        asyncio.run(main())

This script connects to the remote MCP endpoint, discovers a pre-trained template, and invokes it correctly using input and template_number. The result is structured JSON containing live pricing and availability data that an agent can immediately use.
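Once the call returns, the agent still has to unpack the result. An MCP tool result is a list of content items plus an isError flag, and structured data typically arrives as JSON inside a text item. The sketch below unpacks a mocked result and picks the cheapest in-stock offer. The payload fields (results, retailer, price, in_stock) are hypothetical and stand in for whatever schema the chosen template returns.

```python
import json


def extract_json(tool_result: dict):
    """Parse the first text content item of an MCP tool result as JSON."""
    if tool_result.get("isError"):
        raise RuntimeError("tool call reported an error")
    for item in tool_result.get("content", []):
        if item.get("type") == "text":
            return json.loads(item["text"])
    raise ValueError("no text content in tool result")


def cheapest_in_stock(offers: list):
    """Return the lowest-priced available offer, or None if none is in stock."""
    available = [offer for offer in offers if offer.get("in_stock")]
    return min(available, key=lambda offer: offer["price"]) if available else None


# Mocked tool result; the payload fields are made up for illustration.
payload = {"results": [
    {"retailer": "Retailer A", "price": 1599.0, "in_stock": True},
    {"retailer": "Retailer B", "price": 1549.0, "in_stock": False},
    {"retailer": "Retailer C", "price": 1579.0, "in_stock": True},
]}
mock_result = {"content": [{"type": "text", "text": json.dumps(payload)}],
               "isError": False}

offers = extract_json(mock_result)["results"]
best = cheapest_in_stock(offers)
print(best)
```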

Available Tools in Nimble MCP

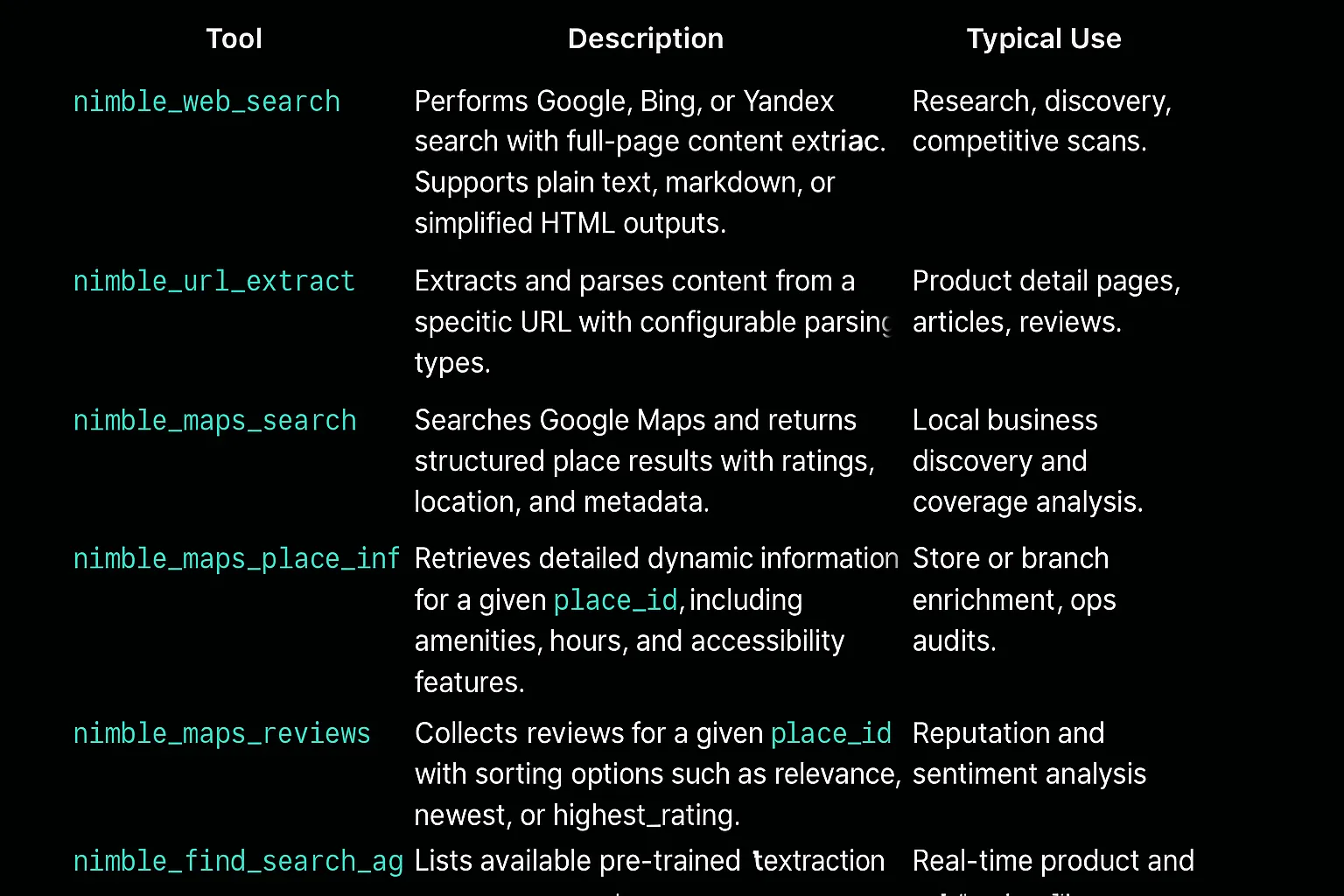

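The Nimble MCP Server provides several built-in tools for different web intelligence tasks, including the nimble_find_search_agent and nimble_run_search_agent tools used above. The tool names and capabilities shown are current as of writing.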

Each tool adheres to MCP standards and outputs governed, schema-consistent JSON. Agents discover these tools with tools/list and invoke them with tools/call, selecting the right capability for the task at hand.

Why It Matters

For developers and AI builders, this integration changes how agents use data. MCP standardizes access to external sources. Nimble makes those sources live, structured, and production-grade.

Instead of relying on cached datasets or third-party summaries, agents can query the open web directly through a governed interface. The data they receive is validated and ready to feed models, analytics, or downstream workflows.

It is a step toward agents that observe the world rather than recall it, and systems that act on verified information instead of assumptions.
