Enabling Your Agents to Search, Extract, and Structure the Web

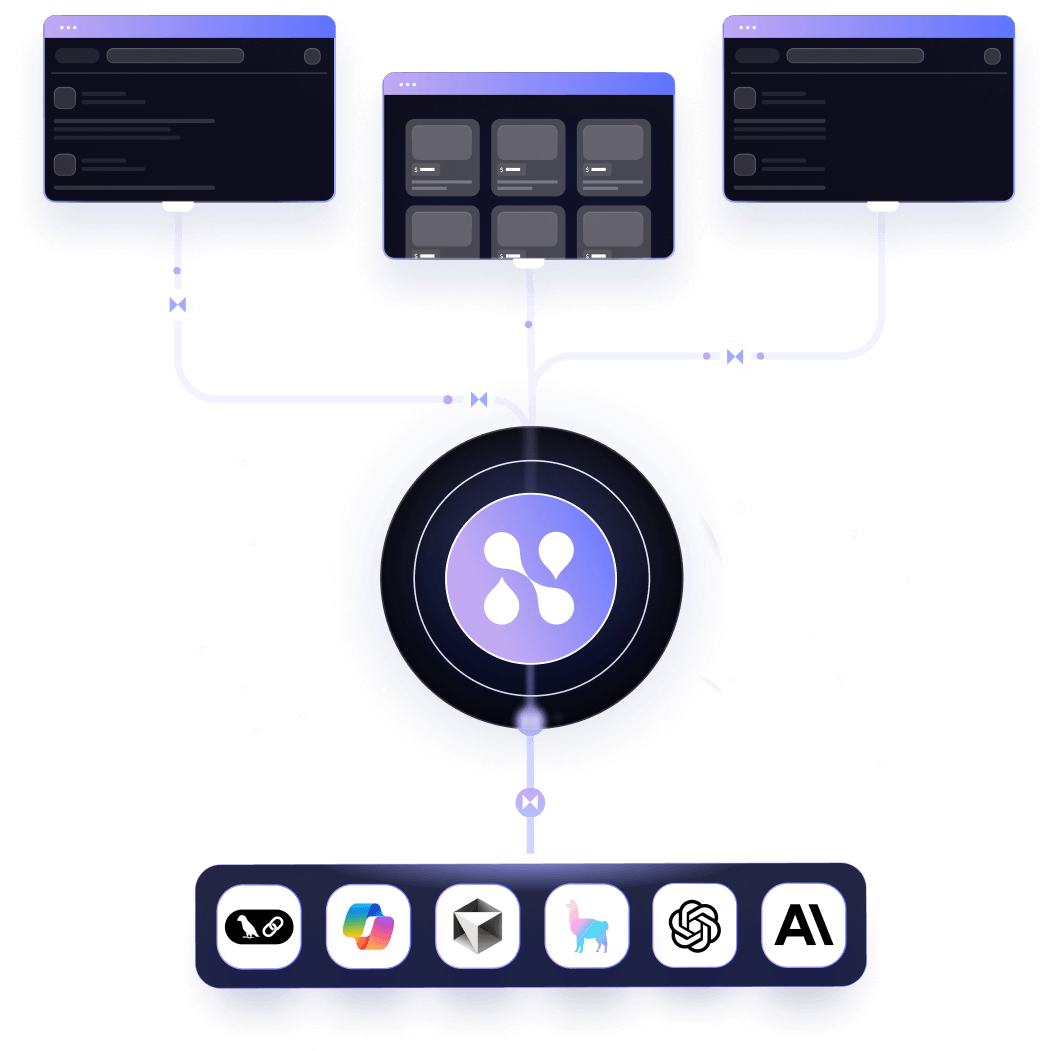

Nimble’s MCP infrastructure includes a suite of tools designed to support intelligent, autonomous reasoning:

- nimble_deep_web_search: Scrape real-time web content from major search engines
- nimble_extract: Extract content from a specific URL
- nimble_google_maps_search: Discover and analyze local businesses
- nimble_google_maps_reviews: Retrieve detailed customer reviews
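To make the tool list more concrete, here is a minimal sketch of how an agent might address one of these tools over MCP. It relies only on the general MCP convention that tools are invoked with a JSON-RPC 2.0 `tools/call` request carrying a tool `name` and an `arguments` object. The helper `build_tool_call` and the argument key `url` are assumptions made for illustration; the actual input schema of each Nimble tool is not given here and should be read from the server's tool listing.

```python
import json
from itertools import count

# Tool names and descriptions copied from the list above.
NIMBLE_TOOLS = {
    "nimble_deep_web_search": "Scrape real-time web content from major search engines",
    "nimble_extract": "Extract content from a specific URL",
    "nimble_google_maps_search": "Discover and analyze local businesses",
    "nimble_google_maps_reviews": "Retrieve detailed customer reviews",
}

# Monotonically increasing JSON-RPC request ids.
_request_ids = count(1)


def build_tool_call(tool_name, arguments):
    """Build an MCP `tools/call` request as a plain dict (hypothetical helper).

    Only the JSON-RPC envelope is standard MCP; the contents of `arguments`
    are an assumption and must match the tool's real input schema.
    """
    if tool_name not in NIMBLE_TOOLS:
        raise ValueError(f"unknown tool: {tool_name}")
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Assumed argument name `url`; check the server's schema before relying on it.
request = build_tool_call("nimble_extract", {"url": "https://example.com"})
print(json.dumps(request, indent=2))
```

The sketch only builds the request payload; sending it requires an MCP client connected to the server, which is left out on purpose because the connection details are not described in this section.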
