8 Steps to Success with LLM Training

.png)

8 Steps to Success with LLM Training

.png)

What’s the point of deploying a Large Language Model (LLM) if it produces unreliable answers as soon as market conditions or customer behavior change? Many organizations are rushing LLMs into production, but the training behind them is often incomplete. They rely on static datasets, skip the preparation work, and then face model drift or bias once the system is in use.

Proper LLM training prevents these failures by shaping a model to handle real tasks and customers. Done well, it improves accuracy, reduces bias, and keeps outputs aligned with business objectives. Deloitte recently reported that 79% of business leaders expect generative AI to reshape their organizations within three years, yet many admit they don’t have the data foundation to train these systems effectively.

The solution is to treat LLM training as a mission-critical system build. With the right process, LLM training becomes an operational discipline that delivers reliable, measurable results instead of a prototype that fails in production.

What is LLM training, and why is it important?

Large language model training is the process of teaching an LLM to understand and generate human language in a way that is accurate, safe, and useful for specific business contexts. It combines broad pre-training, domain-specific fine-tuning, and alignment with human and organizational requirements, all built on high-quality, structured data and sustained through continuous updates.

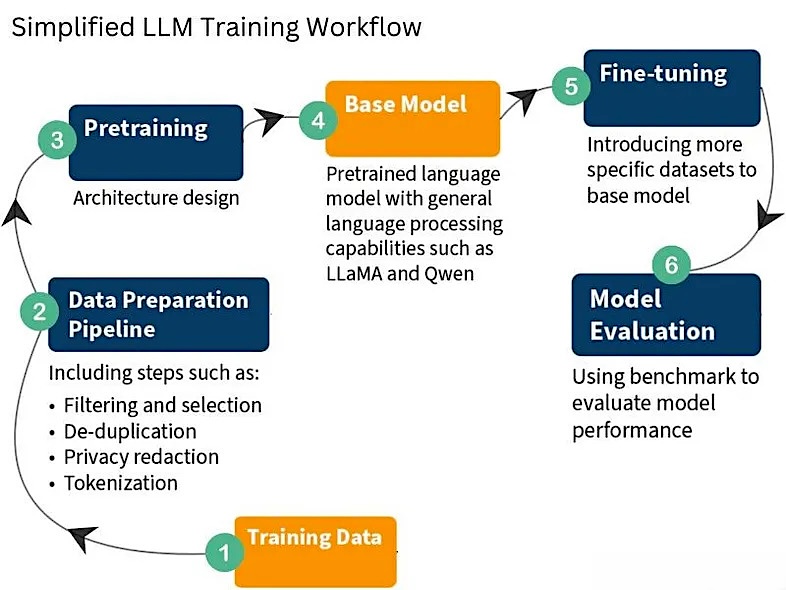

The LLM training process typically follows three stages:

- Pre-training on extensive, diverse text collections and data pipelines to provide the model with broad language understanding and general reasoning skills.

- Fine-tuning on carefully selected, domain-specific data so it can perform the tasks and use the terminology required in a particular field.

- Alignment using methods such as reinforcement learning from human feedback, preference modeling, or rule-based constraints to keep outputs accurate, safe, and practical in real-world applications.

Each stage carries distinct risks: Narrow pre-training data limits reasoning and long-context comprehension, while inadequate fine-tuning leads to domain errors such as incorrect terminology, missing compliance rules, or responses that don’t match business workflows. Weak alignment leaves gaps in safety controls, allowing unsafe, biased, or non-compliant outputs to reach end users.

These risks are compounded when teams rely on static or unstructured datasets instead of the structured, real-time data required to keep models relevant and reliable.

Benefits of LLM Training

Effective LLM training provides organizations with clear, measurable benefits:

- Higher accuracy – Models fine-tuned with carefully chosen, domain-specific data achieve stronger performance on everyday tasks and reduce the rate of incorrect responses.

- Industry fit – Training with sector-specific terminology, workflows, and compliance rules ensures outputs align with real business operations.

- Reduced errors and bias – Targeted data selection and alignment steps lower hallucination rates and help prevent biased outputs, increasing trust among end users.

- Adaptability – Ongoing training with updated data allows models to adjust to changing markets, regulatory requirements, and customer needs without constant manual fixes.

- Competitive advantage – A domain-ready model supports faster, more reliable decision-making and execution across the organization.

- Cost efficiency – Modern fine-tuning techniques reduce infrastructure and data expenses compared with full retraining, providing a more substantial return on investment without compromising model quality.

8 Steps to Success with LLM Training

Step 1: Define Clear Objectives

Defining clear objectives at the outset ensures LLM training resources are directed toward measurable business outcomes. Without shared goals, projects risk drifting into vague ambitions or wasting compute on experiments that never deliver value. Explicit objectives also create alignment across technical teams, compliance stakeholders, and business owners, so everyone agrees on what success looks like.

Turning broad aims into specific targets makes the difference between an aspirational project and one that delivers operational impact. Instead of “better customer insights,” success might mean reducing hallucination rates below 2 percent, reaching 95 percent accuracy on ticket classification, or keeping response latency under 300 milliseconds. Objectives framed this way guide training choices and provide clear checkpoints for accountability.

How to do it:

- Write a model brief outlining core tasks, KPIs (accuracy, hallucination rate, latency), compliance requirements, guardrails, and acceptance criteria for each use case.

- Prioritize 2–3 must-win use cases to focus training resources where the impact will be highest.

- Define ownership and rollback thresholds so there is clarity on who monitors metrics and when to retrain or revert.

- Align stakeholders on success criteria and establish a regular review cadence.

Step 2: Curate and Prepare High-Quality Data

High-quality data is the foundation of effective LLM training. If the training set is incomplete, outdated, or poorly prepared, the model will produce errors, miss critical terminology, or fail compliance checks. Curated, rights-cleared, and well-documented datasets ensure the model learns from information that reflects actual business use cases and customer interactions.

Preparing data requires systematic processes for cleaning, deduplication, normalization, and labeling so the inputs are accurate and consistent. Static datasets lose relevance quickly, while structured, real-time sources provide current information that keeps the model aligned with changing requirements.

How to do it:

- Build a repeatable data pipeline for collection, cleaning, normalization, deduplication, labeling, and documentation.

- Use structured, real-time sources to replace static snapshots and keep the training set current.

- Apply metadata tagging so datasets can be tracked, versioned, and audited throughout the training lifecycle.

- Validate datasets against internal quality standards and link them to compliance checks.

Step 3: Ensure Data Diversity and Fairness

Training data must represent the full range of users, scenarios, and contexts the model will encounter. Without this balance, LLMs systematically underperform for certain demographics, geographies, or languages, leading to biased outputs, compliance risks, and loss of trust in production systems.

Fairness also has to be treated as an ongoing discipline rather than a one-time step. Models that start balanced can drift as new data is introduced or as customer behavior changes. You should perform regular model audits and updates to maintain parity across groups and to ensure the LLM continues to serve all users reliably.

How to do it:

- Audit coverage with automated metrics and human review to identify gaps in demographics, geography, and use cases.

- Augment underrepresented data by collecting new samples or synthetically generating edge cases.

- Use globally sourced, real-time data from a provider like Nimble to maintain diversity across regions and prevent drift toward narrow subsets.

- Define and track fairness KPIs (e.g., performance parity across groups) and include them in routine model evaluations.

Step 4: Build Scalable Training Infrastructure

Training large language models requires systems that can grow with the workload. As models get larger and retraining becomes routine, infrastructure must be able to add capacity quickly and keep jobs running without interruption. Scalable infrastructure ensures the system can handle data parsing, transformation, and movement of large datasets without bottlenecks.

Just as important is the ability to manage and monitor this environment. Versioning, experiment tracking, and monitoring give teams visibility into how training is progressing. Rollback procedures make it possible to recover quickly if a run produces poor results. These controls turn training into a dependable process rather than one that constantly risks disruption.

How to do it:

- Use cloud or hybrid clusters with autoscaling GPUs/TPUs and high-throughput storage.

- Set up Kubernetes or a similar system to schedule distributed jobs and allocate resources efficiently.

- Apply version control with Git and DVC, and track experiments with MLflow or Weights & Biases.

- Add monitoring and alerts for model loss, system utilization, and unexpected cost spikes.

Step 5: Apply Robust Evaluation Metrics

Evaluation confirms that training results meet the objectives defined at the start and that the model performs reliably in production. Without disciplined testing, teams risk promoting models that look strong in training but fail on real tasks. Clear benchmarks make it possible to track accuracy, detect regressions, and decide when a new checkpoint is ready to deploy.

How to do it:

- Create held-out test sets that reflect real use cases, including edge cases and sensitive scenarios. Keep these sets separate from training data.

- Define measurable metrics such as accuracy, hallucination rate, latency, factual consistency, and fairness across user groups.

- Set thresholds for each metric so teams know when a model is good enough to promote.

- Automate evaluation for every new checkpoint and make results visible in dashboards.

- Refresh test sets on a regular schedule so benchmarks stay aligned with current data and usage.

Step 6: Incorporate Real-Time Data for Continuous Training

Models trained on static datasets fall behind as language, policies, and customer behavior change. Continuous training prevents this drift by introducing real-time data into the pipeline at defined intervals.

The update process must include validation, deduplication, and metadata tagging so that only clean, documented information reaches the training environment. Done consistently, this keeps models accurate, compliant, and aligned with business needs without the cost of full retraining.

How to do it:

- Schedule incremental retraining or fine-tuning to absorb new data efficiently.

- Build validation gates to check quality, remove duplicates, and tag metadata before data enters training.

- Use a platform like Nimble to deliver structured, real-time web data that reflects current language, regulations, and market conditions.

- Version data, code, and models so that every update can be traced, audited, or rolled back if needed.

Step 7: Maintain Compliance and Ethical Standards

LLM training must meet regulatory and ethical requirements at every stage. Standards such as GDPR, CCPA, HIPAA, and sector-specific data security rules govern how data is collected, documented, and used. Ignoring these requirements creates regulatory risk and erodes user trust. Embedding compliance throughout the training process ensures models can be deployed safely and withstand audits.

How to do it:

- Record the origin, license, and consent status of each dataset, and maintain immutable logs to provide a complete audit trail.

- Remove or anonymize personally identifiable information (PII) and other sensitive details before data enters training.

- Enforce role-based access controls and encrypt data both in transit and at rest.

- Use a web data platform which ensures that only public information enters the training pipeline and delivers it in a structured, compliance-first format.

- Include fairness, bias, and explainability tests in regular evaluations so ethical standards are measured and enforced.

- Version datasets and model checkpoints to maintain a verifiable chain of custody for regulators and internal reviews.

Step 8: Deploy, Monitor, and Retrain

Deployment connects a trained model to real users and workloads. To perform well in production, the model needs systems that track its behavior, surface performance trends, and update it as conditions change. Monitoring and retraining keep the model accurate, compliant, and responsive over time, turning training into a continuous process rather than a one-off effort.

How to do it:

- Containerize the model with Docker or Kubernetes and expose it through scalable APIs with load balancing and autoscaling.

- Monitor latency, throughput, error rates, and user feedback with observability tools such as Prometheus, Grafana, or Datadog.

- Run drift detection on live traffic and set thresholds that automatically trigger retraining alerts.

- Retrain or fine-tune on a schedule and in response to triggers, using Nimble as the backbone for structured, real-time data that keeps the model aligned with current language, regulations, and customer needs.

- Maintain versioned checkpoints and automated rollback procedures so underperforming models can be replaced quickly.

- Capture user feedback and error reports and feed them back into the training process to improve future iterations.

Turn LLM Training into a Continuous, Reliable Process

Building a large language model that performs consistently requires more than clever prompting. It depends on a repeatable process with clear objectives, quality data, scalable infrastructure, disciplined evaluation, and continuous retraining. The strength of this process determines whether an LLM becomes a dependable business tool or fails to deliver value.

Nimble provides the real-time, structured, and compliant data backbone that eliminates stale or fragmented sources. Its Web Search Agents and online pipelines collect public web information at scale, structure it for training, and deliver it directly into enterprise workflows. By embedding governance features such as automated tagging and compliance checks, Nimble ensures that training data remains accurate, scalable, and production-ready.

Connect with Nimble to discover how it keeps your LLM training accurate and production-ready.

FAQ

Answers to frequently asked questions

.avif)

.png)

.png)

.png)

.png)