Nimble Retriever vs. Tavily: Boosting Your LLM, RAG, and Agents with Real-Time Data

We didn’t plan on launching something new.

Our team at Nimble has been building production-grade browser automation infrastructure for over three years now, powering leading teams with real-time, large-scale web data collection. But this time, we weren’t thinking about products or customers. We were thinking about our agents.

We were just trying to solve a problem.

It Started with a Wall

Like a lot of people building with LangChain, RAG pipelines, or online agents, we hit the same wall: retrievers weren’t pulling full online context.

Not even close.

Instead of the content we needed — long-form documents, detailed web pages, hard-to-reach data — we were getting summaries, snippets, meta descriptions, or worse, nothing at all. JS-rendered content? Gone. Pages behind a minor redirect? Nope. Our agents couldn’t do real work with that kind of context ceiling.

So one of our engineers started tinkering. A few weeks (and way too many scraped pages) later, we had a working internal tool.

It pulled more. It pulled deeper. It worked.

We had no intention of releasing it. But the more we used it, the more it felt like something others might need too.

What Wasn’t Working (Sound Familiar?)

Try something like: “What is the strongest LLM model?”

Most retrievers? You’ll get the title, some SEO fluff, and maybe the first paragraph — unless the content’s behind JS or spread across tabs.

At Nimble, we’re used to solving this differently. Our platform is built to extract full, live, dynamic web data. That means rendering JavaScript, dealing with scale, surviving redirects, and parsing whatever format the web throws at us.

So when we looked for a retriever that could match that level of depth and play nicely with LangChain… nothing really clicked. Most existing tools were wrappers on snippet-based APIs, not built to handle modern websites or real retrieval tasks.

We didn’t want another wrapper. We needed an enterprise-grade web retriever, so we built one.

What We Built: Nimble Web Retrieval's Features

It’s not flashy. It’s not overloaded with features. It just works.

Full-Page Retrieval

Pulls actual content — not just summaries or link previews. Articles, PDFs, investor reports, government proposals, niche forum posts — the kind of context your LLM needs to stop guessing.

Link-Based Extraction

Already have a list of links from a search engine or another system? Just drop them in. It’ll retrieve full content from each URL.

JavaScript Rendering

We use headless browser drivers that mimic real user behavior. Content that loads after window.onload, content in lazy-load divs, even DOM elements rendered by JS: it’s all captured.

Customizable Search

Plug in your preferred search engine (Google, Bing, Yandex) and customize it with region, language, and keyword options. No black-box sourcing — you control the SERP.
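
To make the idea of controlling the SERP concrete, here is a minimal sketch of how search options could be modeled and validated before a query is sent. The SearchOptions class, its field names, and to_params are hypothetical names chosen for illustration. They are not Nimble's API, so check the official docs for the real parameter names.

```python
from dataclasses import asdict, dataclass

# Engines named in the text above; the lowercase spellings are an assumption.
SUPPORTED_ENGINES = ("google", "bing", "yandex")


@dataclass(frozen=True)
class SearchOptions:
    """Hypothetical container for the search settings a caller controls."""

    query: str
    engine: str = "google"
    country: str = "US"
    language: str = "en"

    def __post_init__(self) -> None:
        # Fail early on an engine the sketch does not know about.
        if self.engine not in SUPPORTED_ENGINES:
            raise ValueError(f"unsupported search engine: {self.engine!r}")

    def to_params(self) -> dict:
        """Return the options as a plain dict, e.g. for a request payload."""
        return asdict(self)


options = SearchOptions("best running shoes", engine="bing", country="DE", language="de")
print(options.to_params())
```

Validating options up front keeps a typo in the engine name from turning into an opaque failure later in the pipeline.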

Built to Get Through

We’ve wrapped in our production-grade routing, headers, retries, and stealth mechanisms. No fragile one-shot crawlers — this thing survives and doesn’t get blocked.
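
For readers who want a feel for the retry part of this, below is a rough sketch of a retry loop with exponential backoff. It is a generic pattern, not Nimble's implementation (routing, headers, and stealth are not shown). The fetch_with_retries function and the fetch callable it wraps are hypothetical names.

```python
import time
from typing import Callable, Optional


def fetch_with_retries(
    fetch: Callable[[str], str],
    url: str,
    max_attempts: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Call fetch(url), retrying on failure with exponential backoff.

    `fetch` is a hypothetical callable standing in for whatever loads the
    page; it is expected to raise an exception when the request fails.
    """
    last_error: Optional[Exception] = None
    for attempt in range(max_attempts):
        try:
            return fetch(url)
        except Exception as error:  # a real client would catch narrower errors
            last_error = error
            if attempt < max_attempts - 1:
                # Wait base_delay, then 2x, then 4x, ... before trying again.
                sleep(base_delay * (2 ** attempt))
    raise RuntimeError(f"giving up on {url} after {max_attempts} attempts") from last_error
```

Passing sleep as a parameter is a small design choice that makes the backoff schedule easy to test without actually waiting.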

LangChain Native

It’s built as a first-class retriever. Drop it in, call .invoke(), and pass structured results into your chain. It just fits.

How Does Nimble's Web Retrieval Work?

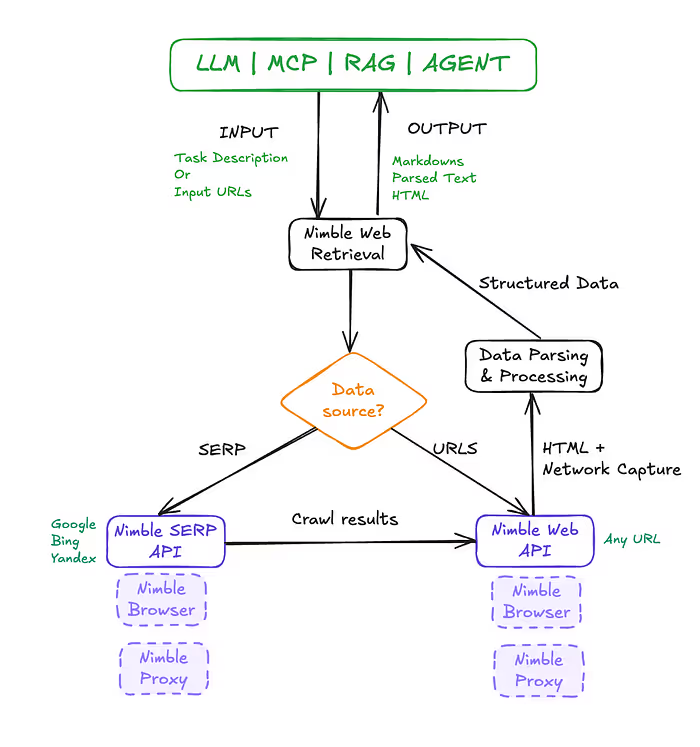

1. Receive Task

Your AI app (MCP, LLM, RAG, or Agent) sends a task to Nimble Web Retrieval, for example "Find recent product reviews for XYZ", along with optional URLs or domains if you already know where to look.

2. Decide Data Source

Provided URLs? → Use them directly. No URLs? → Use a search engine to discover relevant links and extract data from them.

- Use Search Engine (SERP API): If no URLs, search Google/Bing/Yandex and extract result page links, then use the Web API to extract data from them.

- Use Direct URLs (Web API): If URLs are given (or extracted from SERP API), go directly to them using Nimble Web API.
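
The branching in this step can be sketched in a few lines. This is only an illustration of the decision, not Nimble's code: choose_urls and the search callable are hypothetical names, and the search step is injected so the example runs without any network access.

```python
from typing import Callable, List, Optional, Sequence


def choose_urls(
    task: str,
    urls: Optional[Sequence[str]] = None,
    search: Optional[Callable[[str], List[str]]] = None,
    max_results: int = 5,
) -> List[str]:
    """Return the URLs to extract for a task.

    Caller-supplied URLs are used as-is. Otherwise the task text is passed
    to `search`, a hypothetical callable that returns result-page links,
    and only the first `max_results` links are kept.
    """
    if urls:
        return list(urls)
    if search is None:
        raise ValueError("no URLs given and no search function configured")
    return search(task)[:max_results]


# Stand-in for a SERP lookup, used only to make the example runnable.
def fake_search(query: str) -> List[str]:
    return [f"https://result{i}.example/{len(query)}" for i in range(10)]


print(choose_urls("Find recent product reviews for XYZ", urls=["https://shop.example/xyz"]))
print(choose_urls("Find recent product reviews for XYZ", search=fake_search, max_results=2))
```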

3. Data Extraction

The Web and SERP APIs use Nimble Browser and AI Proxy to load pages, capture HTML and network data (XHR calls, AJAX, etc.). This ensures deep access to both static and dynamic content.

4. Parse & Structure Data

Extract and structure the data from raw HTML content. The data is then cleaned up and converted into structured formats like parsed text, markdown, or HTML.
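
As a simplified picture of what "raw HTML to parsed text" means, the sketch below strips tags with Python's standard-library HTMLParser and skips script and style content. It is far cruder than a production parser and is not how Nimble does it. TextExtractor and html_to_text are hypothetical names.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect visible text from HTML, skipping <script> and <style>."""

    SKIPPED_TAGS = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0  # > 0 while inside a skipped tag
        self._chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep non-empty text that is not inside a skipped tag.
        if self._skip_depth == 0 and data.strip():
            self._chunks.append(data.strip())

    def text(self) -> str:
        return " ".join(self._chunks)


def html_to_text(html: str) -> str:
    """Return the visible text of an HTML string as one line."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return parser.text()


sample = "<html><head><script>var x = 1;</script></head><body><h1>Title</h1><p>Hello <b>world</b></p></body></html>"
print(html_to_text(sample))
```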

5. Return to AI App

The structured data is sent back to your MCP / LLM / Agent, ready to be used in workflows like answer generation or decision-making.
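
On the receiving side, an app typically folds the returned pages into a prompt. Here is one possible sketch of that step, assuming each result carries a URL and its parsed text. RetrievedPage and build_context are hypothetical names, and the character budget is an arbitrary example value.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RetrievedPage:
    """Hypothetical shape of one retrieved result."""

    url: str
    text: str


def build_context(pages: List[RetrievedPage], max_chars: int = 4000) -> str:
    """Join pages into one context string, labeling each source.

    The budget counts page text only (not the "Source:" labels), and pages
    are truncated or dropped once it is used up.
    """
    parts = []
    remaining = max_chars
    for page in pages:
        if remaining <= 0:
            break
        snippet = page.text[:remaining]
        parts.append(f"Source: {page.url}\n{snippet}")
        remaining -= len(snippet)
    return "\n\n".join(parts)


pages = [
    RetrievedPage("https://a.example", "First page text."),
    RetrievedPage("https://b.example", "Second page text."),
]
print(build_context(pages, max_chars=30))
```

Keeping the source URL next to each snippet lets the model, or a later step, cite where an answer came from.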

Real Example: LangChain Setup

```python
# In a notebook: %pip install -qU langchain-nimble langchain-openai langchain

import os

from langchain.chains import RetrievalQA
from langchain_nimble import NimbleSearchRetriever
from langchain_openai import ChatOpenAI

# Both clients read their API keys from the environment.
os.environ["OPENAI_API_KEY"] = "your-key"
os.environ["NIMBLE_API_KEY"] = "your-key"

# Retrieve the top 5 results with page rendering turned on.
retriever = NimbleSearchRetriever(k=5, render=True)
llm = ChatOpenAI(model="gpt-4o", temperature=0)

# Answer the question using the retrieved pages as context.
chain = RetrievalQA.from_chain_type(llm=llm, retriever=retriever)
response = chain.invoke("How many employees does NimbleWay have?")
print(response["result"])
```

Tavily vs Nimble: A Quick Look

We don’t mean to throw shade. Tavily is great for lightweight search — but if you’re doing anything beyond a demo, here’s what you’ll notice:

Prompt #1

Prompt: “How many employees does NimbleWay have?”

Tavily:

Approximately 29 employees.

(Wrong answer)

Nimble Retriever:

As of January 2025, NimbleWay has approximately 127 employees.

(Correct — pulled from a dynamically rendered investor report)

Prompt #2

Prompt: “What are Amazon’s best-selling products in 2025?”

Tavily:

Amazon Best Sellers is a list that compiles real-time data on shoppers’ top purchases across various categories like beauty, tech, home, and more. It showcases the most popular products at any given moment, helping customers discover trending items. The list includes a wide range of products, from electronics and clothing to health and household items. Sellers can use this information to identify high-demand products and make informed decisions about what to sell online.

Nimble Retriever:

Based on the information provided, some of the best-selling products on Amazon in February 2025 include items like printer paper, Apple iPads, Apple Watches, MacBook Air laptops, Nespresso coffee pods, protein shakes, baby diapers, vacuum cleaners, energy drinks, and various health and wellness products. These products are popular due to their quality, brand recognition, and customer demand. To get more detailed insights into the best-selling products on Amazon, you can use tools like Helium 10 to analyze sales data and trends.

(All sourced from the real live bestseller page, parsed and linked)

Tavily vs Nimble: Summary

In the LangChain integration, both Nimble and Tavily retrieve information, but in the two prompts above Nimble delivered more accurate, actionable, and richer data.

- Nimble Output: For the Amazon prompt (Prompt #2), Nimble provides specific product names (Apple iPads, MacBook Air, Nespresso coffee pods), market insights, and a tool recommendation (Helium 10 for sales analysis). For the employee-count prompt (Prompt #1), Nimble provides an accurate and relevant answer.

- Tavily Output: For the Amazon prompt (Prompt #2), Tavily returns a general description of Amazon’s Best Sellers list, mentioning broad categories (tech, beauty, home) but lacking concrete details. For the employee-count prompt (Prompt #1), Tavily provides an inaccurate answer.

Web Retrieval Use Cases at Nimble

Here’s where our internal teams are already using the retriever daily:

Data Owners

- RAG Pipelines - Pulling full-page data from complex sites, giving LLMs the context they need to answer technical, legal, or multi-hop questions.

- MCP & Agents - Feeding long-form documents into multi-agent chains that collaborate across search, context, and summarization.

Business Owners

- Market & Competitive Intelligence - Analyzing current pricing, offer pages, reviews, and investor reports — even if they’re behind JS.

- Sentiment Tracking - Collecting full Reddit or X threads, including dynamically loaded posts, quotes, and nested replies.

Why Nimble's Web Retrieval is Different (And Built to Last)

This isn’t just a tool we built for fun.

Nimble is a web data collection platform running in production at massive scale. Our core products (proxy networks, headless browsers, dynamic scraping APIs) are used by hundreds of customers, including some of the largest AI and analytics platforms in the world.

We didn’t throw this retriever together as a side project. We built it with the same tech stack, infrastructure, and resilience we use for our paying customers — and we built it because we needed it.

That’s why it handles edge cases better. That’s why it scales. That’s why it works on real-world websites, not just sanitized demos.

What This Isn’t

- This isn’t a Tavily clone.

- It’s not a scraped-together wrapper.

- It’s not something we’ll abandon in six months.

What it is: a production-ready retriever for LangChain and agent-based workflows, built by the same team that runs high-scale web extraction systems for customers in finance, retail, compliance, and AI.

It’s a first release, and we’re still improving it. But it’s real. It’s solid. It’s working right now.

What’s Next?

We’re planning to release more tools like this: things we built for ourselves that might make your workflows smoother, your agents smarter, and your context deeper.

If you’re building with LangChain, RAG, or MCP and need something that just works - give it a try.

Because we believe the web is the world’s most valuable dataset - and your LLM deserves more than just a snippet.

Ready to level up your AI? Try Nimble, integrate it into your LangChain and AI workflows, and experience more accurate, real-time results for your LLM and RAG applications.

