Everything in, Everything out

Everything in, Everything out

Data tools and infrastructure have come a long way since the early 2000s, and in the age of the modern data stack, data and analytics tools are on the way to full democratization. The cloud revolution allows businesses to operate an incredibly robust data fabric, reduce costs, and minimize friction. The data itself takes center stage, with integration and accessibility tools that help businesses analyze ever-growing quantities of internal data. But those who notice the elephant in the room stand to gain from an immense opportunity - unstructured external data.

Prologue

In some ways, my fascination with the topic of our current data revolution goes back to the late 90s when a story by Bridgewater's Ray Dario caught my eye. I was young when I read about the environment and a decision-making framework where options are weighed in proportion to their data merits. The early use of Big Data, machine learning, and algorithmic programming techniques ignited my imagination of how humans can make more informed decisions based on an amount of data that, until recently, was inconceivable.

My eureka moment happened when I realized the opportunity of the coming “digital revolution”, and that Ray’s story was just an early sign [1]. Fast-forward about three decades, and we’re suddenly very close to “automated” decision-making, allowing us to dream much bigger. Leveraging our natural intuition and creativity, along with actionable insights based on real-time data from various resources, is a game-changer across industries.

In the near future, the hunt for improved insights will move past technical limitations, and those who recognize that our efforts need to be refocused on the data itself stand to gain from an immense opportunity. We’ve made huge progress with the technology surrounding data and making companies’ internal data accessible. However, we haven’t yet structured the staggering majority of the relevant data, and we’re missing a treasure trove that’s right in front of us - publicly available external web data.

“Your company's biggest database isn't your transaction, CRM, ERP or other internal database. Rather it's the Web itself…” - Doug Laney, Gartner, 2015

The transition: modern data stack

Around 2005, we realized how much data is generated through Yahoo, Facebook, YouTube, and other online services. The existing generation of RDBMS systems was incapable of storing, processing, or querying the mass of data that enterprises were starting to amass.

In 2004, Google published the MapReduce paper, which laid the foundation for the early Big Data systems that followed over the next few years - most notably Hadoop [2][3]. Hadoop gave organizations (famously Yahoo and LinkedIn) the ability to store and explore large amounts of data for the first time.

Whether you like it or not, you’ve probably used Facebook Messenger. Over 100 billion messages are sent on the messaging service every day by over 1.3 billion active users. When Facebook decided to enter the messaging app market, it wanted to go big. Their idea was to create a next-level messaging system that would allow users to interact using email, IM, SMS, on-site Facebook messages, and more, in real-time. Facebook’s engineers knew their existing infrastructure wouldn’t cut it, and after extensive testing, they settled on Hadoop as the solution.

Hadoop was very primitive when viewed through the prism of the tools available today, and AI was years away from being operational, let alone common. However, the writing was on the wall, and the valuable future of data was already being recognized. In 2006, British mathematician Clive Humby coined a phrase that quickly became cliché:

“Data is the new oil” [7]

People started discussing how “Big Data” makes it possible to gain more complete answers by taking advantage of the newly-accessible information. We started building technology to come up with answers more confidently by basing them on data—which heralded a completely new approach to tackling problems.

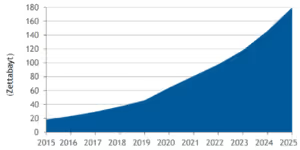

http://www.researchgate.net/figure/DX-and-the-Data-Explosion-Growth-of-Global-DataSphere-Note-The-CAGR-is-26-between-2015_fig1_358104680

A few years after the advent of early Big Data tech, Fortune 1000 companies turned their attention to AI as well. In 2012, Amazon released Redshift, triggering a Cambrian Explosion of development that produced many of the tools still being used to this day [8]. The low price, ultra-high performance, and flexibility of Redshift symbolized the rising tide of cloud computing, and previous-generation monoliths like Hadoop simply could not compete in cost, performance, or flexibility.

The three trends of cloud migration, diversification, and advancement have continued, leading to the self-service modern data stack/platforms.

2022: The data stack isn’t hype - it’s a core business function

The rise of the Data Cloud and the popularity of Software as a Service (SaaS) products in the market provide a tremendous opportunity, not only for data-driven businesses but for every business.

Organizations realized they can focus on platforms that provide direct business value from day one, are easy to use, and require near-zero administration. Cloud data warehouses provide incredible capabilities, and DataOps automation is getting closer and closer to reality [12]. Automation and cost-reduction are now core features of data tools and managed services.

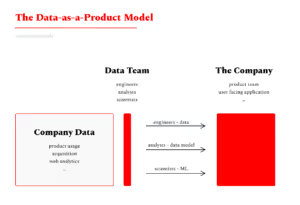

Adopting a modern data stack provides many benefits. The ease of use and minimized integration mean anyone can be a data engineer, data analyst, or machine learning engineer. Data and analytics are more accessible than ever before.

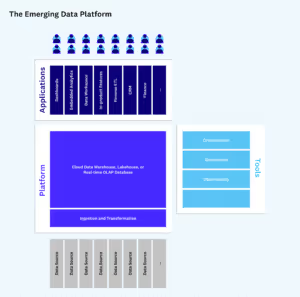

Cloud computing platforms offer all the scalability and compute muscle any organization could want, and the market is saturated with tools to handle any phase of the data chain from collection, ingestion, & storage through transformation, modeling, analytics, and output.

Competitive markets exist for each of these domains, with billion-dollar companies hyper-focused on innovating and dominating these respective spaces. Open-sourced stacks are quickly picking up dedicated fanbases along with great companies that offer managed services.

http://future.com/emerging-architectures-modern-data-infrastructure/

The coming transition: analytics are more accessible than ever

Data tools have also become more diverse and specialized, and stacks are often tailored for client companies individually [9][10]; from integration, event tracking, transformation, artificial intelligence, analytics, reverse ETL, data catalog and observability, data governance, and more.

The modern data stack is still growing and evolving rapidly, and the list is only getting longer as new categories emerge such as the metrics layer, real-time/streaming (Materialize, Decodable, Meroxa), and predictive analytics. These emerging fields are reducing the technical barriers to entry and helping analysts use AI and ML to deliver fast, comprehensive, and actionable insights (Sisu Data, pecan.ai).

Have you ever heard of the traveling salesman problem? So have the folks at UPS. Their solution was to reduce left turns as much as possible, down to just 10% of all turns made by UPS trucks. Why? Because UPS took a long, hard look at weather, truck, traffic, and other related external data, and realized that the shortest route to a delivery address wasn’t always the best route. In fact, thanks to this policy, UPS saves roughly 10 million gallons of fuel and delivers 350,000 more packages every year [11]. Amazing!

Worldwide access to a great data stack has changed the business landscape. However, maintaining a competitive edge not only depends on knowing how to manage, process, and analyze, it also depends on the data itself.

The limitation: garbage in, garbage out

There is a massive asymmetry between the brainpower invested in technical solutions to data vs. identifying and accessing the right data.

90% of all existing worldwide data has been generated in the last two years [13], and only 2% of that data has been analyzed, while over 80% of it is unstructured and inaccessible [14] (many thanks to Young Sohn for sharing these amazing statistics with me).

The industry’s focus will soon shift toward importing, structuring, and assimilating external data. This new objective has the potential to revolutionize business intelligence as the quality, quantity, and diversity of insights multiply with the introduction of external data into the robust modern data stack.

Data marketplaces don’t address the immense volume of inaccessible data due to low update speeds and niche targeting. Data-sharing companies (oceanprotocol.com) have also attempted to create a shared marketplace for data, but they have hardly scratched the surface. The biggest database in the world is clearly the internet, but it’s unstructured, which makes it inaccessible to use across organizations.

The biggest companies already understand the power of external data in their decision-making. For example, McDonald’s data-driven growth strategy substantially accelerated its expansion to 34,000 local restaurants [15].

Coca-Cola has long been a pioneer in Big Data analysis and analytics and has used external data to achieve a myriad of goals including creating new products (Cherry Sprite!), gauging environmental factors to ensure their signature flavors remain consistent and monitoring social media by using AI image recognition to understand and personalize the ad experience served to individual users [16].

Netflix used predictive data analytics to make creative decisions and green-light $100+ million dollar projects such as the incredibly successful House of cards and Bird Box [17]. These are just a few examples of what happens when companies utilize the tip of the external data iceberg.

A new era of data collection is coming.

External data pipelines will launch a monumental shift. This technology isn’t some database that will provide faster performance, a visualization tool that will give executives more freedom, or an incremental update that will make your data team’s job easier or save your company money.

When you need to answer a question, you turn to Google, but when your business needs to conduct “Big Data” research, it requires hours of manual work. Imagine for example an analyst at Coca-Cola who wants to know how prices have changed over the last six months at all of their major competitors.

What if this query was accessible:

SELECT `price` FROM `allMyCompetitors` WHERE `market` = 'myMarket' AND `product` = 'myProduct';

This is the promise of external data. It will enable you to quickly receive a real-time result of all the prices of all the products competing with your product in your target market. You’ll easily discover how many positive and negative reviews have been posted regarding your service across the web, with full metadata including publishing time, location, author demographics, and more. Imagine what your AI models would look like if they had access to transcriptions for any YouTube video ever made!

The volume of relevant data in the digital space is unfathomable, and it's exciting to imagine how public external web data would be useful across industries. This volume also reveals useful insight into what solutions will be the most effective. In the past, SaaS models provided excellent solutions to focused and well-defined problems. External data, by its very definition, requires flexibility, dynamism, and rapid adaptation, traits that are not adequately fulfilled by SaaS models that can be rigid, resource intensive, and lock the user into a fixed set of functions.

Many of the leading data tools that make up the MDS have recognized these limitations and made the transition from SaaS to PaaS models. External data needs to do the same. A flexible, real-time, on-demand PaaS solution, that intelligently adapts data from any source, would allow data teams and analysts to quickly pull in new data as it becomes relevant, find correlations and relationships between various data sets, and discover new applications that could never be imagined in advance.

We’re still in the early stages of defining the analytical and operational data platform, and the coming integration of external data into the modern data stack will supercharge and transform the next generation of AI, analytics, and BI. We can expect massive disruptions on the horizon. The ability to combine multiple data sources, in real-time, on-demand, with the business context to gain a complete market understanding will not just generate more revenues for businesses in the short term, it will enable a new generation of insights that I, as a boy, could hardly dream of. I guess Ray Dario saw it coming.

PS:

Data teams now have more tools, resources, and organizational momentum behind them than at (probably) any point since the invention of the database, and given the huge potential these developments promise, I hope to see ambitious engineers, founders, and initiatives move into this space and bring the same level of innovation and ingenuity we’ve come to expect from the data-sphere. Let's democratize data gathering!

[1] - http://www.amazon.com/Principles-Life-Work-Ray-Dalio/dp/1501124021/ref=nodl_?dplnkId=df1c9b78-6ac5-4b7f-8acd-35fef8db2733

[2] - http://medium.com/@markobonaci/the-history-of-hadoop-68984a11704

[3] - http://www.itproportal.com/features/the-data-processing-evolution-a-potted-history/

[4] - http://www.theregister.com/2010/12/17/facebook_messages_tech/

[5] - http://www.wired.com/2011/10/how-yahoo-spawned-hadoop/

[6] - http://www.geeksforgeeks.org/hadoop-history-or-evolution/?ref=lbp

[7] - http://comsocsrcc.com/data-is-the-new-oil/

[8] - http://www.getdbt.com/blog/future-of-the-modern-data-stack/

[9] - http://future.com/wp-content/uploads/2020/10/Blueprint-2_-Multimodal-Data-Processing-1.png

[10] - http://future.com/emerging-architectures-modern-data-infrastructure/

[11] - http://www.ge.com/news/reports/ups-drivers-dont-turn-left-probably-shouldnt-either

[12] - http://www.gartner.com/smarterwithgartner/gartner-top-10-data-and-analytics-trends-for-2021

[13] - http://www.sciencedaily.com/releases/2013/05/130522085217.htm

[14] - http://deep-talk.medium.com/80-of-the-worlds-data-is-unstructured-7278e2ba6b73

[15] - http://yourshortlist.com/how-bi-and-big-data-is-essential-to-mcdonald-s-growth-strategy/

[16] http://www.forbes.com/sites/bernardmarr/2017/09/18/the-amazing-ways-coca-cola-uses-artificial-intelligence-ai-and-big-data-to-drive-success/

[17] http://www.forbes.com/sites/jonmarkman/2019/02/25/netflix-harnesses-big-data-to-profit-from-your-tastes/

FAQ

Answers to frequently asked questions

.avif)

.avif)

.avif)

.png)